What is Docker?

Docker is an open-source platform for developing, shipping, and running applications. It’s designed to make it easier to create, deploy, and run applications using containers. Containers allow a developer to package up an application with all the parts it needs, such as libraries and other dependencies, and ship it all out as one package.

The key benefit of Docker is that it allows users to package an application with all its dependencies into a standardized unit for software development. This means that the application will run the same, regardless of the environment it is running in.

In software development, this is a significant advantage. It means that developers can focus on writing code without worrying about the system that it will be running on. It also allows them to work in the same environment, which greatly reduces the number of bugs that are introduced due to differences in environments.

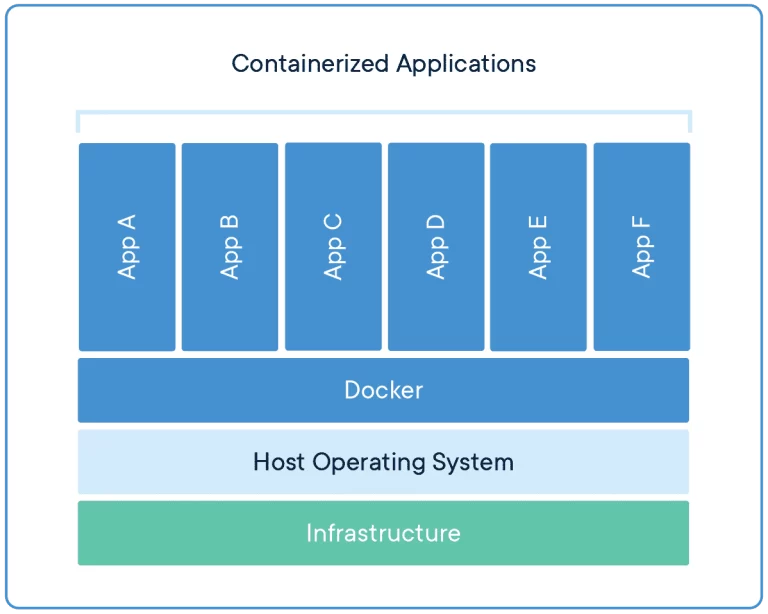

Docker containers are lightweight, meaning they use fewer system resources than traditional hardware virtualization such as virtual machines. This is because they don’t need a full guest operating system to run. Instead, they share the kernel of the host operating system.

Another advantage of Docker is its speed. Containers can be started almost instantly, which is a lot faster than starting a virtual machine. This makes it very efficient for development workflows and scaling applications.

Docker also has a large and active community, which means plenty of resources are available for learning and troubleshooting. Many pre-built images are available on Docker Hub, which can be used as the starting point for your own images.

Key Features

Docker offers many features that make it a popular tool for developers and operations teams. Here are some of the key highlights:

Open-Source Platform: Docker is an open-source platform that enables developers to build, deploy, run, update, and manage containers. Containers are standardized, executable components that combine application source code with the operating system (OS) libraries and dependencies required to run that code in any environment.

Easy Delivery of Applications: Docker makes it quick and simple to set up an environment, so code can be shipped and deployed with little friction. Docker containers share the host’s operating system kernel and only include the necessary dependencies, making them much smaller and faster to start than traditional virtual machines.

Containerization: Docker’s core functionality revolves around containers. Containers are lightweight and self-contained environments that isolate your application and its dependencies from the underlying system. This ensures consistent behavior regardless of the environment the container runs on.

Segregation of Duties: Docker is intended to improve consistency by ensuring that the environment in which your developers write code matches the environment in which your applications are deployed. This reduces the chance of the classic “it worked in dev, now it’s an ops problem” handoff.

Service-Oriented Architecture: Docker also supports service-oriented and microservice architectures. Docker suggests that each container runs only one application or process. This encourages the use of a distributed application model in which an application or service is represented by a collection of interconnected containers.

Faster Development and Deployment: Docker streamlines the development lifecycle by allowing developers to use containers in standardized environments. This means applications can be easily moved between development, testing, and production stages without worrying about environment inconsistencies.

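Isolation and Security: Applications running in Docker containers are isolated from each other and from the host system. This enhances security, because a compromised container is less likely to affect other containers or the host. The isolation is not absolute, though, since all containers share the host’s kernel.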

Portability: Docker containers are portable across different environments as long as they have a Docker runtime. This makes it easy to deploy applications on various platforms like cloud servers, on-premises infrastructure, or even developer laptops.

Scalability: Scaling applications with Docker is simple. You can easily spin up new instances of your containerized application to handle increased load.

Image Management: Docker uses images to build containers. These images are essentially templates that define the container’s configuration and contents. Docker provides a registry for sharing and storing images, making collaboration and deployment easier.
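
Docker identifies image layers and manifests by SHA-256 content digests, written as `sha256:<hex>`. You can list them with `docker images --digests`. The Python sketch below illustrates content addressing with the standard `hashlib` module. `content_digest` is a hypothetical helper, not Docker’s own code, and it hashes arbitrary bytes rather than a real image manifest.

```python
import hashlib


def content_digest(blob: bytes) -> str:
    """Return a content address in the 'sha256:<hex>' form used for image digests."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


if __name__ == "__main__":
    layer_v1 = b"example layer contents"
    layer_v2 = b"example layer contents, edited"
    print(content_digest(layer_v1))
    # Identical bytes always produce the identical digest...
    print(content_digest(layer_v1) == content_digest(layer_v1))  # True
    # ...while any change to the content produces a different one.
    print(content_digest(layer_v1) == content_digest(layer_v2))  # False
```

The identifier is derived from the bytes themselves. This lets a client verify what it pulled and skip layers it already stores.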

Networking: Docker containers can be attached to the same network, allowing them to communicate with each other. This simplifies the process of building complex multi-container applications.
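
The Python helper below sketches how containers join a shared network from the command line. It only builds `docker` CLI argument lists and does not run them. On a user-defined bridge network, containers can reach each other by container name.

The following names are hypothetical:
- the network name `app-net`
- the container names `cache` and `web`
- the image `myapp:latest`
- the `CACHE_HOST` variable, an assumed setting of that app

`redis:7` is an official Docker Hub image, used here only as an example.

```python
def network_commands(network: str, cache_image: str, app_image: str) -> list[list[str]]:
    """Build docker CLI argument lists that put two containers on one user-defined network."""
    return [
        # Create a user-defined bridge network (bridge is the default driver).
        ["docker", "network", "create", network],
        # Start the cache container on that network under the name "cache".
        ["docker", "run", "-d", "--name", "cache", "--network", network, cache_image],
        # Start the app on the same network; it can reach the cache at hostname "cache".
        ["docker", "run", "-d", "--name", "web", "--network", network,
         "-e", "CACHE_HOST=cache", app_image],
    ]


if __name__ == "__main__":
    for argv in network_commands("app-net", "redis:7", "myapp:latest"):
        print(" ".join(argv))  # or execute with subprocess.run(argv, check=True)
```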

Docker Compose: Docker Compose is a tool for defining and managing multi-container applications. It allows you to specify the configuration for all the containers in your application using a single YAML file. This streamlines the deployment and management of complex applications.
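
Here is a minimal sketch of a two-service Compose file. It assumes a hypothetical web app built from a Dockerfile in the current directory, plus a Redis cache. The configuration is built as a Python dictionary and serialized with the standard `json` module. Because JSON is, for practical purposes, a subset of YAML, the output can be saved as `compose.yaml`. The service names, the port, the `CACHE_HOST` variable and the volume name are illustrative assumptions.

```python
import json

# Hypothetical application: "web" is built locally, "cache" comes from Docker Hub.
compose = {
    "services": {
        "web": {
            "build": ".",
            "ports": ["8000:8000"],
            "environment": {"CACHE_HOST": "cache"},
            "depends_on": ["cache"],
        },
        "cache": {
            "image": "redis:7",
            "volumes": ["cache-data:/data"],
        },
    },
    # Named volume managed by Docker, so cache data survives container re-creation.
    "volumes": {"cache-data": {}},
}


def render_compose(spec: dict) -> str:
    """Serialize the spec as JSON text, which a YAML parser can also read."""
    return json.dumps(spec, indent=2)


if __name__ == "__main__":
    with open("compose.yaml", "w", encoding="utf-8") as fh:
        fh.write(render_compose(compose))
    # Then, from the same directory: docker compose up -d
```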

Volume Management: Docker volumes provide a mechanism for persistently storing and managing data in containers. A volume is a directory or a named storage location outside the container’s file system that is accessible to one or more containers.
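
Volumes and bind mounts can both be attached with the `--mount` flag of `docker run`. The hypothetical Python helper `mount_flag` below just assembles that flag. The volume name `app-data`, the host path and the image `myapp:latest` are placeholders.

With `type=volume`, Docker creates the named volume if it does not exist yet. A `type=bind` source, by contrast, must already exist on the host.

```python
def mount_flag(kind: str, source: str, target: str, readonly: bool = False) -> list[str]:
    """Return a ['--mount', '...'] pair for `docker run`.

    kind is 'volume' (storage managed by Docker) or 'bind' (an existing host path).
    """
    if kind not in ("volume", "bind"):
        raise ValueError(f"unsupported mount type: {kind}")
    spec = f"type={kind},source={source},target={target}"
    if readonly:
        spec += ",readonly"
    return ["--mount", spec]


if __name__ == "__main__":
    # Named volume: Docker creates "app-data" on first use and manages where it lives.
    print(" ".join(["docker", "run", "-d", *mount_flag("volume", "app-data", "/data"), "redis:7"]))
    # Bind mount: a hypothetical host directory mapped read-only into the container.
    print(" ".join(["docker", "run", "-d",
                    *mount_flag("bind", "/srv/app-config", "/etc/app", readonly=True),
                    "myapp:latest"]))
```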

Drawbacks

Docker is a popular tool for containerization, but like any technology, it has its drawbacks. Here are some of the disadvantages of Docker:

Outdated Documentation: Docker’s extensive documentation doesn’t always keep pace with platform updates. This can lead to confusion and difficulties when developers try to implement features or troubleshoot issues based on outdated information.

Learning curve: Docker can be complex for beginners, especially those unfamiliar with containerization concepts. It requires a new way of thinking about how applications are built and run.

Security Issues: Docker containers share the host system’s kernel. This means that if a container is compromised, the host system could also be compromised. Docker has some security features, but they may not be sufficient to prevent all types of attacks.

Performance Overhead: Compared to running directly on the host, Docker containers add slight overhead due to the isolation layer, so they might not be ideal for ultra-high-performance applications.

Limited Orchestration: Docker does offer some automation features, such as Docker Compose and Swarm mode. However, its orchestration capabilities are not as robust as dedicated orchestration platforms like Kubernetes. Without a capable orchestrator, it can be difficult to manage many containers across multiple environments at the same time.

Limited GUI support: Docker wasn’t designed for graphical applications. While workarounds exist, they can be clunky. Docker shines with server-side applications.

Storage complexities: Managing persistent data storage for containers can be tricky. While solutions exist, they might not be as seamless as traditional methods.

Containers Don’t Run at Bare-Metal Speeds: While containers consume resources more efficiently than virtual machines, they are still subject to performance overhead. This comes from overlay networking, the interface between containers and the host system, and so on. Even on a bare-metal server, containers come close to bare-metal performance but do not fully match it.

What is Dockerization?

Dockerization refers to the process of packaging an application and all its dependencies into a standardized unit called a Docker container. Docker is a popular open-source platform that allows developers to create and manage these containers.

Dockerization includes:

Creating a Dockerfile: This is a text document that contains instructions for building a Docker image. It specifies the base operating system, necessary software packages, environment variables, and other configurations needed for the application to run.

Building a Docker Image: Using the Dockerfile as a blueprint, Docker builds a self-contained image that includes the application code and all its runtime dependencies. This image acts as a template for creating Docker containers.

Running a Docker Container: The Docker image can be used to create one or more containers. Each container is a running instance of the application isolated from other containers and the host system.
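
The three steps above can be sketched for a hypothetical Python application with an `app.py` entry point and a `requirements.txt` file. The Python helper below writes a minimal Dockerfile. The base image `python:3.12-slim`, the file names and the image tag `myapp` are assumptions. The result is a starting point, not a production-hardened template.

```python
from pathlib import Path


def render_dockerfile(base_image: str, entry_script: str) -> str:
    """Return Dockerfile text for a simple Python app (a sketch, not a hardened template)."""
    lines = [
        f"FROM {base_image}",                                  # base OS image plus language runtime
        "WORKDIR /app",                                        # working directory inside the image
        "COPY requirements.txt .",                             # dependency list first, for layer caching
        "RUN pip install --no-cache-dir -r requirements.txt",  # install runtime dependencies
        "COPY . .",                                            # then copy the application code
        f'CMD ["python", "{entry_script}"]',                   # process to run when a container starts
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    Path("Dockerfile").write_text(render_dockerfile("python:3.12-slim", "app.py"))
    # Build the image, then run a container from it:
    #   docker build -t myapp .
    #   docker run --rm myapp
```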

Dockerization offers several benefits for application development and deployment:

Portability: Docker containers can run on any system with Docker installed. On Windows and macOS, Docker Desktop supplies the Linux kernel that Linux containers need through a lightweight virtual machine. This ensures consistent application behavior across different environments.

Isolation: Each container runs in its own isolated space, preventing conflicts between applications and enhancing security.

Efficiency: Docker containers are lightweight and share the host system’s kernel, making them resource-efficient.

Reproducibility: Dockerfiles ensure a consistent and predictable environment for your application, eliminating the “it works on my machine” problem.

In essence, Dockerization simplifies application development, deployment, and management by providing a standardized and portable way to package and run applications.

Use Cases

Docker is versatile and has a wide range of use cases across various domains. Here are some of the most common use cases:

Application Packaging and Deployment: Docker allows developers to package an application with all its dependencies into a Docker container. This container can be thought of as a standalone executable software package that includes everything needed to run the application. This ensures that the application will run on any other Linux machine regardless of any customized settings or installed libraries that machine might have.

Microservices Architecture: Docker is ideal for microservices architecture because it allows each service to run in its own container. This means that each service can be developed, deployed, and scaled independently of the others. This also allows for better resource allocation and isolation.

DevOps and Continuous Integration/Continuous Deployment (CI/CD): Docker containers provide consistent environments from development to production, which is a key requirement for CI/CD. This consistency eliminates the “it works on my machine” problem and allows developers to spend more time developing new features and less time fixing environment-related issues.

Isolation and Resource Efficiency: Docker containers are isolated from each other and from the host system. Each container has its own filesystem and networking and can be controlled to use a specific amount of resources. This means that you can run many containers on a single machine without them interfering with each other.
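
Resource limits are set per container with flags such as `--cpus` and `--memory`. Docker’s documentation describes `--cpus=1.5` as equivalent to `--cpu-period=100000 --cpu-quota=150000`. The small Python sketch below reproduces that arithmetic and builds a limited `docker run` command. The function names are hypothetical, and `redis:7` is just an example image.

```python
CFS_PERIOD_US = 100_000  # Docker's default CPU scheduling period, in microseconds


def cpus_to_cfs(cpus: float, period_us: int = CFS_PERIOD_US) -> tuple[int, int]:
    """Translate a --cpus value into the equivalent (--cpu-period, --cpu-quota) pair."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    return period_us, round(cpus * period_us)


def limited_run(image: str, cpus: float, memory: str) -> list[str]:
    """Build a `docker run` command that caps CPU and memory for one container."""
    return ["docker", "run", "-d", f"--cpus={cpus}", f"--memory={memory}", image]


if __name__ == "__main__":
    print(cpus_to_cfs(1.5))  # (100000, 150000)
    print(" ".join(limited_run("redis:7", 1.5, "512m")))
```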

Hybrid and Multi-Cloud Deployments: Docker’s portability makes it a popular choice for hybrid and multi-cloud deployments. Docker containers can run on any infrastructure that supports Docker, whether it’s on-premises, in the public cloud, or in a hybrid cloud environment.

Scalability and Load Balancing: Docker can be used to handle increased load by simply starting more instances of an application. Docker containers can be easily started, stopped, and replicated, which makes scaling out to handle more traffic as simple as running a command.

Legacy application modernization: Docker can be used to package legacy applications into containers, making them more portable and easier to manage alongside modern applications.

Cloud deployments: Docker containers are perfect for cloud environments. They are lightweight and portable, making them ideal for scaling applications up or down based on demand.

Data science: Data scientists use Docker to create reproducible data analysis environments. All the necessary libraries and tools can be packaged into a container, ensuring consistent results across different environments.

Running multiple applications on a single server: Docker allows you to efficiently run multiple applications on a single server by isolating them in containers. This saves resources and simplifies server management.

Testing and QA: Docker provides a consistent environment for testing and QA. This means that you can be sure that your tests are running in the same environment where your application runs, which can reduce bugs and improve software quality.

Docker Pricing

Docker offers a freemium pricing model with tiers catering to individual developers, small teams, and large enterprises.

Free Personal Plan: This plan is free and is ideal for new developers or students who are just starting with containers. It provides Docker Desktop, which is a desktop application for building and sharing containerized applications. This plan also offers unlimited public repositories, meaning you can create and share as many collections of Docker images as you want. Additionally, you can download Docker images from Docker Hub up to 200 times in a 6-hour period, which is referred to as 200 image pulls per 6 hours. Lastly, this plan gives you access to Docker Engine for creating and managing containers, and Kubernetes for orchestrating and managing containerized applications.

Pro Yearly Plan: This plan is designed for professional developers looking to speed up their development process. It costs $5 per month and includes everything in the Personal plan, plus Docker Desktop Pro, which is an enhanced version of Docker Desktop with additional features like improved performance and support. This plan also provides unlimited private repositories, allowing you to create and share as many private collections of Docker images as you want. You also get a higher limit of 5,000 image pulls per day. Other benefits include 5 concurrent builds, Synchronized File Shares, Docker Debug, Local Scout analysis and remediation, and a 5-day support response time.

Team Yearly Plan: This plan is for development teams aiming to increase collaboration and agility. It costs $9 per user per month and includes everything in the Pro plan, plus Docker Desktop Teams, which is a version of Docker Desktop designed for team collaboration. This plan allows up to 100 users and unlimited teams. It also provides 15 concurrent builds, the ability to add users in bulk, audit logs, role-based access control, and a 2-day support response time. Included minutes and cache are 400 per org per month and 100 GiB per org per month, respectively.

Business Yearly Plan: This plan is for businesses seeking to establish an enterprise-grade development approach. It costs $24 per user per month and includes everything in the Team plan, plus Docker Desktop Business, which is a hardened version of Docker Desktop with additional security features. This plan also offers Single Sign-On (SSO), SCIM user provisioning, VDI support, Private Extensions Marketplace, Image and Registry Access Management, purchase via invoice, priority case routing, proactive case monitoring, and a 24-hour support response time. Included minutes and cache are 800 per org per month and 200 GiB per org per month, respectively.

Please note that Docker Desktop is free for small businesses (fewer than 250 employees AND less than $10 million in annual revenue), personal use, education, and non-commercial open-source projects. Otherwise, it requires a paid subscription for professional use. Paid subscriptions are also required for government entities.

Why Choose Docker?

Docker’s ability to work across multiple environments, platforms, and operating systems is one of its key advantages. This feature, known as cross-platform consistency, ensures that all members of a development team are working in a unified way, regardless of the server or machine they are using.

Another significant advantage of Docker is its small footprint. Containers share the host’s kernel instead of booting a full guest operating system, so they don’t require a lot of memory to run reliably. This makes Docker a lightweight and efficient solution for many applications.

Docker also excels in deployment speed. By eliminating redundant installations and configurations, Docker allows for fast and easy deployment. This can significantly reduce the time it takes to get a new application or service up and running.

Flexibility and scalability are also key features of Docker. Docker allows developers to use any programming language and tools that suit their project. Additionally, Docker makes it easy to scale container resources up and down as needed. This means that as your application grows, Docker can easily accommodate the increased demand.

Docker’s efficient resource utilization is another reason for its popularity. Docker containers can be started within seconds, ensuring faster deployment. They also allow for efficient scaling and maintenance of containers.

Finally, Docker can help drive down costs by letting you run more workloads on the same infrastructure. This makes Docker a cost-effective solution for many organizations.

Workflow of Docker

Docker is an open platform for developing, shipping, and running applications. The Docker workflow typically involves the following stages:

Write the Code (and Test It): This is the initial stage where you write your application code. This could be in any language that Docker supports, which is virtually any language. You write your code using any IDE or text editor on your local machine. Testing your code thoroughly is important to ensure it works as expected before moving to the next step.

Build a Container Image: Once your code is ready, you need to create a Dockerfile. A Dockerfile is a text document that contains all the commands a user could call on the command line to assemble an image. Using docker build, you create a Docker image from your Dockerfile. This image includes everything needed to run your application – the code itself, runtime, libraries, environment variables, and config files. The Docker image is essentially a snapshot of the application and its environment.

Push the Image to the Server: After the Docker image is built, it’s pushed to a Docker registry. This could be Docker Hub, which is the default registry where Docker looks for images, or any other hosted registry service like Google Artifact Registry or Amazon Elastic Container Registry. The image is stored in the registry and can be pulled from there to any host machine that needs to run the application.

Restart the Application with the New Image: Finally, the application is restarted on the host machine, but now it’s running inside a Docker container. The Docker container is a running instance of the Docker image. You can have many containers running off the same image. Docker containers encapsulate everything needed to run the application, so they are highly portable and can run on any machine that has Docker installed.
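
The build, push and restart stages can be summarized as a short sequence of CLI commands. The Python sketch below only builds the argument lists. The registry `registry.example.com/team`, the image name `myapp` and the tag are hypothetical. Pushing assumes you have already run `docker login` for that registry.

```python
def release_commands(image: str, tag: str, registry: str) -> dict[str, list[str]]:
    """Return the docker CLI argument lists for one build-push-restart cycle."""
    ref = f"{registry}/{image}:{tag}"
    return {
        # Developer or CI machine: build from the Dockerfile in the current directory.
        "build": ["docker", "build", "-t", ref, "."],
        # Upload the image (assumes a prior `docker login` for this registry).
        "push": ["docker", "push", ref],
        # Host machine: fetch the new image...
        "pull": ["docker", "pull", ref],
        # ...remove the old container (on a first deploy there is nothing to remove)...
        "remove": ["docker", "rm", "-f", image],
        # ...and start a container from the new image under the same name.
        "run": ["docker", "run", "-d", "--name", image, ref],
    }


if __name__ == "__main__":
    cmds = release_commands("myapp", "1.0.1", "registry.example.com/team")
    for step, argv in cmds.items():
        print(f"{step:>6}: {' '.join(argv)}")
```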

This workflow is part of what makes Docker so powerful for developers. It allows for consistent environments from development to production, and the lightweight nature of containers makes it feasible to run your applications in isolated silos. This isolation reduces conflicts between your applications and allows you to get more out of your hardware.

How does Docker Work?

Docker is an open platform that allows you to automate the deployment, scaling, and management of applications within containers. These containers are lightweight and standalone packages that contain everything needed to run an application, including the code, runtime, system tools, libraries, and settings.

The creation of a Docker container begins with packaging an application and its dependencies. This virtual container can run on any Linux server, providing flexibility and portability across various infrastructures, whether on-premises, public cloud, private cloud, or bare metal.

One of Docker’s key features is its ability to isolate applications within containers. This isolation allows multiple containers to run simultaneously on a single host without interfering with each other, giving each application its own separated environment.

Containers are not only isolated but also portable. They contain everything needed to run an application, so they don’t rely on the host system’s configuration. This means you can run your code on any system that has Docker installed, making your applications highly portable.

Docker also provides tools to manage the lifecycle of your containers. You can develop your application and its supporting components using containers. The container becomes the unit for distributing and testing your application. When you’re ready, you can deploy your application into your production environment, either as a container or as an orchestrated service. This works the same whether your production environment is a local data center, a cloud provider, or a hybrid of the two.

Finally, Docker’s portability and lightweight nature make it easy to dynamically manage workloads. You can scale up or tear down applications and services as business needs dictate, in near real-time.

In summary, Docker streamlines the development lifecycle by allowing developers to work in standardized environments using local containers that provide your applications and services. It’s a powerful tool for both development and deployment, offering a very flexible and scalable solution for application management. It’s like having a mini-computer that you can start, stop, move, and delete as needed, all from your command line. It’s a game-changer for software development and deployment.

Architecture

Docker uses a client-server architecture. Here are the key components:

Docker Client: The Docker client, also known as the Docker CLI (Command Line Interface), is the primary user interface to Docker. It accepts commands from the user and communicates with the Docker daemon, which does the heavy lifting of building, running, and distributing Docker containers. The Docker client and daemon can run on the same host or you can connect a Docker client to a remote Docker daemon. The communication between the Docker client and daemon can happen over a network, a UNIX socket, or a Windows named pipe.

Docker Daemon: The Docker daemon, also known as dockerd, is a persistent process that manages Docker containers and handles container objects. The daemon listens for requests sent via the Docker Engine API. The daemon can manage images, containers, networks, and volumes.
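
The Docker CLI talks to the daemon by sending HTTP requests to the Engine API. On Linux this goes over the UNIX socket `/var/run/docker.sock` by default. The Python sketch below uses only the standard library to send `GET /_ping`, an Engine API endpoint that answers `OK` when the daemon is up.

The helper names are hypothetical. For real projects, the official Docker SDK for Python is the usual choice. Accessing the socket typically requires root or membership in the `docker` group.

```python
import http.client
import socket

DEFAULT_SOCKET = "/var/run/docker.sock"  # default daemon socket on Linux hosts


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that speaks HTTP over a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()  # avoid leaking the socket when the daemon is unreachable
            raise
        self.sock = sock


def docker_ping(socket_path: str = DEFAULT_SOCKET) -> bool:
    """Return True if a daemon answers GET /_ping with 'OK', else False."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/_ping")
        resp = conn.getresponse()
        return resp.status == 200 and resp.read().strip() == b"OK"
    except (OSError, http.client.HTTPException):
        # Missing socket, permission denied, timeout, or a malformed response.
        return False
    finally:
        conn.close()


if __name__ == "__main__":
    print("daemon reachable:", docker_ping())
```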

Docker Host: The Docker host is the machine where the Docker daemon and Docker containers run. It provides the runtime environment for containers. The Docker host also includes Docker images, containers, networks, and storage.

Docker Registry: Docker Registry is a service that stores Docker images. It can be public or private. Docker Hub is a public registry that anyone can use, and Docker is configured to look for images on Docker Hub by default. When you use the docker pull or docker run commands, the required images are pulled from your configured registry.
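
When an image name does not include a registry, Docker resolves it against Docker Hub. Official images are placed under the `library/` namespace, and the `latest` tag is assumed when none is given. The Python function below is a simplified re-implementation of those rules, for illustration only. It ignores edge cases that Docker’s real reference parser handles, and its name is hypothetical.

```python
def normalize_image_ref(ref: str) -> str:
    """Expand a short image reference to registry/path:tag form (simplified rules)."""
    # Split off an optional digest such as "@sha256:...".
    name, _, digest = ref.partition("@")
    # A tag follows the last ':' that comes after the last '/';
    # a ':' before the last '/' is a registry port, as in "localhost:5000".
    tag = ""
    last_colon, last_slash = name.rfind(":"), name.rfind("/")
    if last_colon > last_slash:
        name, tag = name[:last_colon], name[last_colon + 1:]
    # The first path component counts as a registry host only if it looks like one.
    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", name
    # Official Docker Hub images live under the "library/" namespace.
    if registry == "docker.io" and "/" not in path:
        path = "library/" + path
    # With neither a tag nor a digest, Docker assumes the "latest" tag.
    if not tag and not digest:
        tag = "latest"
    result = f"{registry}/{path}"
    if tag:
        result += f":{tag}"
    if digest:
        result += f"@{digest}"
    return result


if __name__ == "__main__":
    print(normalize_image_ref("nginx"))                  # docker.io/library/nginx:latest
    print(normalize_image_ref("myuser/app"))             # docker.io/myuser/app:latest
    print(normalize_image_ref("localhost:5000/app:v2"))  # localhost:5000/app:v2
```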

Docker Objects: Docker objects are various entities used to assemble an application in Docker. The main objects are images, containers, networks, and volumes.

- Images: Docker images are read-only templates that you build from a set of instructions written in a Dockerfile. Images define both what you want your packaged application and its dependencies to look like and what processes to run when it’s launched.

- Containers: A container is a runnable instance of an image. You can create, start, stop, move, or delete a container using the Docker API or CLI. You can connect a container to one or more networks, attach storage to it, or even create a new image based on its current state.

- Networks: Docker’s networking subsystem is pluggable, using drivers. Several network drivers exist out of the box and provide core networking functionality.

- Volumes: Volumes are the preferred mechanism for persisting data generated by and used by Docker containers. While bind mounts are dependent on the directory structure of the host machine, volumes are completely managed by Docker.

Docker’s architecture is designed in a way that it can work in a highly distributed environment and scale up, allowing you to deploy complex applications with ease.

How to Install and Configure Docker?

Installing and configuring Docker depends on your operating system. Here’s a general guideline, but it’s recommended to refer to the official Docker documentation for detailed instructions specific to your system:

Check System Requirements: Docker Desktop for Windows requires Windows 10 64-bit: Home, Pro, or Enterprise version 1903 (Build 18362) or later. Docker uses features of the Windows kernel specifically for virtualization and containers. Therefore, older Windows versions, like Windows 8.1 and earlier, do not support Docker Desktop.

Download Docker Desktop Installer: Docker provides an installer for Windows that you can download from the Docker website. This installer includes all the dependencies and components needed for Docker to run.

Run the Installer: After downloading the installer, you can run it to install Docker. The installer provides an easy-to-use wizard to guide you through the installation process. By default, Docker Desktop is installed at C:\Program Files\Docker\Docker.

Enable WSL 2 Feature: Docker Desktop on Windows uses the Windows Subsystem for Linux version 2 (WSL 2) as its recommended backend for running containers. Therefore, you need to enable WSL 2 on your Windows system. To do this:
- open the Windows Features dialog (search for “Turn Windows features on or off” in the Start menu);
- check both the “Windows Subsystem for Linux” and “Virtual Machine Platform” options;
- click OK.

Restart Your System: After enabling WSL 2 and installing Docker, you may need to restart your system for the changes to take effect.

Launch Docker Desktop: Once your system restarts, you can launch Docker Desktop from the Start menu or the system tray. When Docker Desktop is running, you should see a Docker icon in your system tray.

Test Docker Installation: To verify that Docker is installed correctly, you can open a command prompt or PowerShell window and run the command docker run hello-world. This command downloads a test image and runs it in a container. If the container runs successfully, Docker is installed and working correctly.
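
The same check can be scripted, for example in a setup script. The Python sketch below runs `docker run --rm hello-world` and looks for the image’s `Hello from Docker!` greeting. It adds `--rm` so the test container is removed afterwards. The function name is hypothetical. The `run` parameter exists only so the check can be tested without Docker installed.

```python
import subprocess


def docker_hello_world_ok(run=subprocess.run) -> bool:
    """Run the hello-world image and report whether Docker printed its greeting."""
    try:
        result = run(["docker", "run", "--rm", "hello-world"],
                     capture_output=True, text=True, timeout=120)
    except (FileNotFoundError, subprocess.TimeoutExpired):
        return False  # docker CLI not on PATH, or the pull/run took too long
    return result.returncode == 0 and "Hello from Docker!" in result.stdout


if __name__ == "__main__":
    print("Docker working:", docker_hello_world_ok())
```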

History

Docker is a set of platform-as-a-service (PaaS) products that leverage OS-level virtualization to deliver software in packages known as containers. The journey of Docker began in 2008 when it was founded as DotCloud by Solomon Hykes in Paris. Initially, it was a platform as a service (PaaS) before it pivoted in 2013.

On March 20, 2013, Docker was released as open-source. It was originally built as an internal tool to simplify the development and deployment of applications. Docker made its public debut at PyCon in Santa Clara in 2013. At that time, Docker used LXC as its default execution environment.

A significant change occurred in 2014 when Docker, with the release of version 0.9, replaced LXC with its own component, libcontainer, which was written in the Go programming language. This marked a shift in Docker’s underlying technology.

The software that hosts the containers is called Docker Engine. Docker is a tool designed to automate the deployment of applications in lightweight containers, ensuring that applications can work efficiently in different environments. Docker can package an application and its dependencies in a virtual container that can run on any Linux, Windows, or macOS computer. This flexibility allows the application to run in a variety of locations, such as on-premises or in a public or private cloud.

What’s New in Docker?

Docker Desktop has introduced a feature that lets administrators enforce the use of Rosetta via Settings Management. Rosetta is Apple’s binary translation software. Docker Desktop uses it on Apple silicon Macs, such as M1 models, to run x86/amd64 images.

The Docker socket mount restrictions for Enhanced Container Isolation (ECI) are now generally available. With ECI enabled, Docker restricts which containers can mount the Docker socket, enhancing the security of your Docker environment.

Docker Desktop has been upgraded to take advantage of the Moby 26 engine. This new engine includes BuildKit 0.13, which is a toolkit for converting source code to build artifacts in an efficient, expressive, and repeatable manner. It also includes sub-volume mounts, networking updates, and improvements to the containerd multi-platform image store user experience.

Docker Desktop has introduced new and improved error screens. These screens are designed to help users troubleshoot issues more quickly, easily upload diagnostics, and take remedial actions based on the provided suggestions.

Docker Compose now supports Synchronized file shares, which is currently in the experimental stage. This feature allows for real-time synchronization of files between the host and the containers.

A new interactive Compose Command Line Interface (CLI) has been introduced, which is also in the experimental stage. This new CLI provides a more interactive user experience when using Docker Compose.

Docker has released several features in beta. These include:
- Air-gapped containers with Settings Management, which let administrators restrict containers’ access to network resources.
- Host networking in Docker Desktop, which allows containers to use the host’s network stack.
- Docker Debug, which provides debugging capabilities for running containers.
- Volumes Backup & Share extension functionality, now available in the Volumes tab, which allows users to back up and share Docker volumes.

Lastly, Docker has upgraded several components, including Docker Compose to v2.26.1, Docker Scout CLI to v1.6.3, Docker Engine to v26.0.0, Buildx to v0.13.1, Kubernetes to v1.29.2, cri-dockerd to v0.3.11, and Docker Debug to v0.0.27.

Docker in Cloud Computing

Docker is a set of platform-as-a-service (PaaS) products that use operating-system-level virtualization to deliver software in packages called containers. Here’s how Docker fits into cloud computing:

Containerization: Docker uses containerization technology. A container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another. Containers are isolated from each other and bundle their own software, libraries, and configuration files.

Portability: Docker containers are lightweight and portable. This means you can run a Docker image as a Docker container on any machine where Docker is installed, regardless of the operating system.

Efficiency and Scalability: Docker is known for its efficiency and scalability. It allows you to quickly deploy and scale applications into any environment and manage them with ease.

Integration with Cloud Services: Docker can be integrated with cloud platforms like AWS. This provides developers and admins with a highly reliable, low-cost way to build, ship, and run distributed applications at any scale. Docker collaborates with AWS to help developers speed delivery of modern apps to the cloud.

Microservices Architecture: Docker makes it easy to build and run distributed microservices architectures. It allows you to deploy your code with standardized continuous integration and delivery pipelines, build highly-scalable data processing systems, and create fully-managed platforms for your developers.

Docker Compose: Docker Compose is a tool for defining and running multi-container Docker applications. With Compose, you use a YAML file to configure your application’s services. Then, with a single command, you create and start all the services from your configuration.

Docker Swarm: Docker Swarm is a clustering and scheduling tool for Docker containers. With Swarm, IT administrators and developers can establish and manage a cluster of Docker nodes as a single virtual system.

Amazon ECS: Amazon Elastic Container Service (ECS) is a highly scalable, high-performance container orchestration service that supports Docker containers and allows you to easily run and scale containerized applications on AWS.

In summary, Docker in cloud computing is a tool that automates the deployment, scaling, and management of applications. It helps applications keep working when they are moved from one platform to another, making Docker a key player in the world of cloud computing.

Competitors

Podman

Docker has been the industry standard for container management for a long time. It operates on a client-server architecture, which means it uses a persistent background process, known as the Docker daemon, to manage containers. This architecture allows Docker to provide all the necessary components for creating and running containers through its core application and integrated tools. Docker is particularly known for its user-friendly interface, extensive ecosystem, and seamless integration throughout the development-to-production cycle. If your application requires a widespread ecosystem and industry adoption, Docker is a strong choice.

On the other hand, Podman, developed by Red Hat, is an open-source, daemonless, rootless container engine. Unlike Docker, Podman manages containers using the fork-exec model: each Podman command launches containers itself, as child processes, instead of asking a long-running daemon to do it. Because nothing has to run in the background between commands, Podman uses system components only when needed, which makes it more lightweight. Podman is known for its lightweight container management, advanced security features, simplified image handling, and ability to work with Docker images. If security is your top priority, Podman, with its rootless and daemonless operation, may be the better choice.

Docker’s extensive ecosystem and industry adoption make it a strong choice for applications requiring a widespread ecosystem. Conversely, Podman’s advanced security features and lightweight container management make it a strong choice for security-focused applications.

Kubernetes

Docker is a platform that uses OS-level virtualization to deliver software in packages called containers. Each container is isolated from others and bundles its own software, libraries, and system tools. This ensures that the application runs quickly and reliably from one computing environment to another. Docker provides an additional layer of abstraction and automation of OS-level virtualization on Windows and Linux. It automates the deployment, scaling, and management of applications within containers.

On the other hand, Kubernetes, also known as K8s, is an open-source system for automating deployment, scaling, and management of containerized applications. It groups containers that make up an application into logical units for easy management and discovery. Kubernetes provides a framework to run distributed systems resiliently. It takes care of scaling and failover for your applications, provides deployment patterns, and more.
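Much of this automation rests on a control-loop idea: you declare a desired state, such as “three replicas of this app”, and Kubernetes controllers keep comparing it with what is actually running and act on the difference. The Python function below is a toy, single-pass illustration of that idea, not Kubernetes code; the pod naming scheme is a hypothetical simplification (real pods usually get generated names).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Action:
    kind: str  # "create" or "delete"
    name: str  # pod name (the naming scheme here is hypothetical)


def reconcile(app: str, desired: int, running: List[str]) -> List[Action]:
    """One pass of a toy reconciliation loop for a replicated app.

    Compares the desired replica count with the pods observed running and
    returns only the actions needed to close the gap.
    """
    if desired < 0:
        raise ValueError("desired replica count must be non-negative")
    actions: List[Action] = []
    if len(running) < desired:
        taken = set(running)
        index = 0
        # Create pods with the lowest unused names until the count matches.
        while len(running) + len(actions) < desired:
            name = f"{app}-{index}"
            if name not in taken:
                actions.append(Action("create", name))
            index += 1
    else:
        # Delete any pods beyond the desired count, from the end of the list.
        actions.extend(Action("delete", name) for name in running[desired:])
    return actions
```

In broad strokes, a real controller repeats such a pass whenever it observes a change, so a creation that fails is simply retried on the next pass.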

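While Docker focuses on automating the deployment and management of applications within containers, Kubernetes is about the orchestration and coordination of containers across multiple hosts. Kubernetes schedules containers onto nodes, where an agent called the kubelet runs them through a container runtime. Older Kubernetes versions could drive the Docker engine directly through a component called dockershim, which was removed in Kubernetes 1.24; images built with Docker still run unchanged on runtimes such as containerd and CRI-O.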

In essence, Docker and Kubernetes are two different technologies that are often used together for containerized application development and deployment. Docker is used for creating and managing individual containers, while Kubernetes is used for managing clusters of containers at a larger scale. They are complementary rather than direct competitors: deployed together, they provide applications with scalability, agility, and resiliency, and both play crucial roles in continuous integration/continuous delivery (CI/CD) pipelines. Together, they form a complete containerization infrastructure.

Rkt

Rkt, pronounced “rocket”, is a command-line interface for running application containers on Linux. It was designed with a focus on security, speed, and composability. One of its key features is that it runs without a long-lived root daemon, which enhances its security. Additionally, it does not require a private registry for sharing images, which simplifies distribution. Rkt’s architecture is modular, meaning it uses separate modules to add features, which makes it flexible and adaptable to various needs. Rkt also has a smaller codebase than Docker, which can make it easier to maintain and debug, and it was designed to be usable in a wide variety of environments. In terms of performance, Rkt was generally considered faster than Docker, and its security features were often praised as more robust than Docker’s.

On the other hand, Docker is an open-source platform used for building and managing containerized applications. Unlike Rkt, Docker relies on a long-running daemon that traditionally runs with root privileges, which can be a potential security concern, although Docker has since added a rootless mode. Docker distributes images through registries such as Docker Hub or a self-hosted private registry. Docker was originally less modular than Rkt, bundling most of its features into a single daemon, which made it more monolithic and less flexible; it has since split core components out into separate projects such as containerd and runc. Docker has a larger codebase than Rkt, which can make it more complex to maintain and debug, and it was generally considered slower than Rkt. However, Docker has a larger ecosystem and a more user-friendly interface, which can make it more appealing to many users.

In summary, while Docker has a larger ecosystem and a more user-friendly interface, Rkt offered stronger security and a decentralized design philosophy. If security was a top priority, Rkt might have been the better choice. Note, however, that the rkt project was archived by the CNCF in 2019 and is no longer maintained. If you’re looking for a solution with a large community and extensive resources, Docker is likely to be more suitable.

Containerd

Docker is a comprehensive platform that packages applications and their dependencies into containers. It provides a standardized format for this process. Docker offers a wide range of features and tooling, making it a go-to solution for users who are looking for an all-in-one solution for container management. It simplifies the user experience by providing a way to manage and orchestrate containerized applications.

On the other side of the spectrum, we have Containerd. Containerd is a container runtime that focuses on simplicity, robustness, and portability. Unlike Docker, Containerd only focuses on the core runtime aspects. This focus provides simplicity and stability. Containerd is ideal for users who prefer a lightweight and modular container runtime. Interestingly, Docker uses Containerd as its runtime to pull images from image registries, create containers, manage storage and networking, and interact with containers.

When we compare Docker and Containerd, we need to consider their design and functionality, compatibility, performance, and use case.

Docker, with its extensive features and tooling, provides a complete container platform. In contrast, Containerd, with its focus on core runtime aspects, offers simplicity and stability.

In terms of compatibility, Containerd was split out of Docker in 2016. This was done to allow other container ecosystems like Kubernetes, AWS Fargate, and Rancher to use it.

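Performance is another important factor to consider. Because containerd leaves out Docker’s higher-level tooling, it has a smaller footprint, while Docker trades some of that leanness for convenience. Choosing the wrong container technology may lead to suboptimal performance, additional complexity, or insufficient features.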

Lastly, the use case plays a crucial role in deciding between Docker and Containerd. If you’re a developer or part of a small team seeking a comprehensive containerization platform that’s easy to use, Docker might be the ideal choice. On the other hand, if you need a customizable container runtime, especially for integration into a larger container platform, Containerd provides the flexibility that suits your needs.

LXD

LXD and Docker are both technologies used for containerization, but they serve different purposes and are used in different contexts. LXD is a system container manager that was developed by Canonical, the company that is also behind Ubuntu Linux. It was designed to provide a more user-friendly way to create, manage, and run system containers on Linux-based systems.

System containers differ from application containers, such as Docker’s, in that they are designed to encapsulate an entire operating system. This makes them a form of lightweight virtualization that uses resources efficiently and lets you run many containers on a single host. Because of this, LXD is often compared to traditional hypervisors such as Xen and KVM rather than to Docker.

LXD containers are known for being fast and lightweight, which allows for the rapid deployment and scaling of applications. They also provide efficient use of system resources, which means you can run a large number of containers on a single host.

Docker, on the other hand, is a platform that provides a suite of tools for developing, deploying, and running containers. Unlike LXD, Docker focuses on the delivery of applications from development to production. Docker containers, also known as process containers, are designed to package and run a single process or service per container. They are typically used for running stateless types of workloads that are meant to be ephemeral.

Docker is typically used for deploying applications, while LXD is used for running system containers that mimic an entire operating system. If you’re looking to run an entire operating system in a container, LXD might be the better choice. If you’re looking to deploy a specific application in a container, Docker might be more suitable.

OpenShift

Docker is an open-source platform that has revolutionized the way we think about software deployment. It allows developers to package an application with all its dependencies into a standardized unit for software development. This unit, known as a container, can be deployed on any system that supports Docker, ensuring consistency across different environments. Docker also provides Docker Swarm, a native clustering and scheduling tool for Docker-based applications. Docker Swarm is lightweight and easy to use, making it suitable for small-scale container orchestration use cases.

On the other hand, OpenShift, developed by Red Hat, is a comprehensive enterprise-grade platform that provides a complete solution for managing containerized applications. It’s built on top of Kubernetes, the leading container orchestration platform; earlier versions used Docker as their container runtime, while current versions use CRI-O. OpenShift extends Kubernetes by adding features such as multi-tenancy, continuous integration and continuous deployment (CI/CD), and advanced security features. It’s designed to meet the operational needs of an entire organization, making it a good choice for large-scale, complex deployments.

While Docker and OpenShift both deal with containers, they serve different purposes and are not direct competitors. Docker is primarily used by developers to standardize their development workflows, while OpenShift is used by operations teams to manage containerized applications in production environments. In fact, Docker containers can run within OpenShift deployments, showing that these two technologies can complement each other.

When comparing Docker Swarm and OpenShift, Docker Swarm can be seen as an alternative to OpenShift for small-scale use cases. However, for larger-scale and more complex deployments, OpenShift might be a more appropriate choice due to its advanced features and integrations.

Docker provides a flexible and lightweight platform for developing and running containerized applications, while OpenShift offers a robust and comprehensive solution for managing these applications at scale.

D2iQ

D2iQ and Docker are both prominent entities in the container management software market. They cater to slightly different needs and have distinct strengths. Based on user reviews, D2iQ has a rating of 3.8 out of 5 stars from 10 reviews, while Docker Enterprise, which Mirantis acquired in 2019 and now offers as Mirantis Kubernetes Engine, has a higher rating of 4.5 out of 5 stars from 279 reviews. This indicates that Docker might be more popular and better received among users, although D2iQ’s rating rests on far fewer reviews.

However, it’s crucial to understand that the optimal choice between these two depends on your specific business needs. Reviewers felt that Docker was better suited to their business needs than D2iQ, but they preferred D2iQ for the quality of ongoing product support.

In terms of the direction of the product, D2iQ and Docker received similar ratings from reviewers. This suggests that both companies have a robust vision for the future of their products.

To sum up, while Docker may have a higher overall rating, D2iQ also has its strengths, particularly in terms of product support.

Companies Using Docker

JPMorgan Chase

JPMorgan Chase, one of the world’s largest banks, has been leveraging Docker and other modern data architectures to enhance their enterprise data platform. They have implemented a data mesh architecture, which is a novel way of managing and organizing data that aligns data technology with data products.

In this architecture, data products are defined by people who understand the data, its management requirements, permissible uses, and limitations. These data products are empowered to make management and use decisions for their data. This approach allows JPMorgan Chase to share data across the organization while maintaining appropriate control over it. They enforce these decisions by sharing data rather than copying it, and by providing clear visibility into where data is being shared across the enterprise.

The data mesh concept organizes data architectures around business lines with domain context. This approach is fundamental to JPMorgan Chase’s data strategy, as it allows the business domain to own the data end-to-end, rather than going through a centralized technical team. A self-service platform is a key part of their architectural approach, where data is discoverable and shareable across the organization and ecosystem.

Product thinking is central to the idea of data mesh: data products are expected to power the next era of data success. Data products are built with governance and compliance that is automated and federated. This ensures that data risks are managed, particularly in regulated industries like banking.

While the specific details of how Docker is used within this architecture are not publicly available, Docker is typically used in such scenarios to containerize applications, ensuring they run uniformly across different computing environments. This can greatly aid in the implementation of a data mesh architecture by facilitating the deployment and scaling of data products across the organization.

ThoughtWorks

ThoughtWorks, like many organizations, uses Docker as a key component in their transition to the cloud. Docker allows teams to develop an application in their testing environment and trust that it will run seamlessly in production. This means that a containerized application will run whether it’s in a private data center, the public cloud, or even a developer’s laptop.

The portability of containers also enables ThoughtWorks to scale their applications reliably. For instance, they could easily add more capacity ahead of a peak period, such as Black Friday, and scale down once the event is over.

However, managing containers can be challenging, and Docker is no exception. ThoughtWorks may need to make additional investments in container orchestration tools to get the most out of their use of Docker.

Spotify

Spotify, a leading music streaming service, has adopted Docker, a platform for containerization, to streamline their development and deployment procedures. Docker’s containerization reduces the chance of “it works on my machine” problems as the software operates uniformly in different situations. This standardization is crucial in Spotify’s architecture which spans across various contexts, from production servers to local development devices.

Docker provides an isolated and lightweight runtime environment through containers. Spotify optimizes resource consumption and prevents service conflicts by placing each component of their application and its dependencies into a separate container. This isolation also enhances security as each service operates within its own container, preventing interference from other services. This isolation is crucial to protect user data and ensure a safe streaming experience.

Docker’s ability to encapsulate dependencies allows Spotify developers to easily launch isolated instances of their apps. This accelerates the development and testing cycles, facilitating a more responsive and agile development workflow. Docker’s containerization greatly speeds up Spotify’s deployment process. Quick-starting containers minimize downtime and enable Spotify to release updates and new features more rapidly.

Spotify uses a microservices architecture to manage its complex ecosystem. Docker supports the independent deployment and scaling of individual microservices, providing the flexibility required to adapt to changing customer needs. Docker’s efficient use of system resources allows Spotify to maximize server utilization, ensuring a more cost-effective and scalable architecture. This efficiency leads to lower costs and improved overall performance.

Orchestration tools used alongside Docker, such as Kubernetes and Docker Compose, simplify the management of Spotify’s complex infrastructure. Orchestration ensures a reliable service for millions of users through smooth scaling, load balancing, and high availability.
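Scaling in orchestrators such as Kubernetes is often automated. Kubernetes’ Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below is a generic Python rendering of that formula, not a description of Spotify’s setup, and the metric values in it are illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float) -> int:
    """Core Horizontal Pod Autoscaler rule:

        desired = ceil(current_replicas * current_metric / target_metric)

    Omits the real controller's tolerance band, stabilization window,
    and min/max replica bounds.
    """
    if target_metric <= 0:
        raise ValueError("target metric must be positive")
    return math.ceil(current_replicas * current_metric / target_metric)


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(4, 90, 60))
```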

In summary, Spotify’s use of Docker is indicative of its innovative approach to infrastructure management and software development. It helps Spotify minimize environment drift by running their code in containers across development and production, as well as for training machine learning models.

Pinterest

Initially, the cloud management platform and site reliability engineering teams at Pinterest started to shift their workload from EC2 instances to Docker containers. This move included migrating more than half of their stateless services, including their entire API fleet. The adoption of a container platform brought several benefits. It improved developer velocity by eliminating the need to learn tools like Puppet. It also provided an immutable infrastructure for better reliability, increased agility to upgrade their underlying infrastructure, and improved the efficiency of their infrastructure.

Before the migration, services at Pinterest were authored and launched using a base Amazon Machine Image (AMI) with an operating system, common shared packages, and installed tools. For some services, there was a service-specific AMI built on the base AMI containing the service dependency packages. They used Puppet to provision cron jobs, infra components like service discovery, metrics, and logging, and their config files. However, this process had several pain points, such as engineers needing to be involved in the AMI build and learning various configuration languages.

To address these issues, Pinterest decided to migrate their infrastructure to Docker. They first moved their services to Docker to free up engineering time spent on Puppet and to have an immutable infrastructure. They are now in the process of adopting container orchestration technology to better utilize resources and leverage open-source container orchestration technology.

After dockerizing most of their production workloads, including the core API and Web fleets, by the first half of 2017, Pinterest started their journey on Kubernetes. They chose Kubernetes because of its flexibility and extensive community support. They built their own cluster bootstrap tools based on Kops and integrated existing infrastructure components into their Kubernetes cluster. They introduced Pinterest-specific custom resources to model their unique workloads while hiding the runtime complexity from developers.

In summary, Docker has played a significant role in Pinterest’s infrastructure, improving developer productivity, infrastructure efficiency, and service reliability. They have successfully migrated many of their services to Docker and are now leveraging Kubernetes for container orchestration. This journey has been a significant part of Pinterest’s growth and success.

Shopify

Shopify has adopted Docker to containerize its services, with the primary goal of enhancing the efficiency and adaptability of their data centers. Docker containers serve as the fundamental units that transform their data centers into predictable, fast, and easy-to-manage environments.

To successfully implement containerization, Shopify combines development and operations skills. They use Ubuntu 14.04 as their base image and avoid deviating from this base to prevent simultaneous battles with containerization and operating system/package upgrades.

In terms of container style, Docker provides a range of options, from ‘thin’ single-process containers to ‘fat’ containers. Shopify has chosen the ‘thin’ container approach, eliminating unnecessary components within the containers. This results in smaller, simpler containers that consume less CPU and memory.

For environment setup, Shopify employs Chef to manage their production nodes. They have set a goal to share a single copy of services like log indexing and stats collection on each Docker host machine, rather than duplicating these services in every container.

When it comes to deployment, all Shopify app templates include a Dockerfile with instructions for deploying a web app in a Docker container. To streamline the deployment process, they suggest choosing a hosting provider that supports Docker-based deployment.

In summary, Docker plays a crucial role in Shopify’s infrastructure, enabling them to create containers that power over 100,000 online shops. It has transformed their data centers into more manageable and adaptable environments and has simplified the deployment process.

Netflix

Netflix has adopted Docker containers as a key part of its infrastructure due to their flexibility and efficiency. The company runs a diverse and dynamic set of applications on its cloud platform, including streaming services, recommendation systems, machine learning models, media encoding, continuous integration testing, and big data analytics. Docker containers let Netflix run these applications consistently across its infrastructure, largely independent of the underlying hardware and network. This flexibility also enables Netflix to deploy applications faster and more frequently, as Docker containers can be easily built, tested, and shipped across different environments.

One of the primary use cases for Docker containers at Netflix is batch processing. Batch processing involves executing jobs that run on a time or event-based trigger, performing tasks such as data analysis, computation, and reporting. Netflix runs hundreds of thousands of batch jobs every day, using various tools and languages such as Python, R, Java, and bash scripts. To schedule and run these batch jobs, Netflix uses Titus, its own container management system. Titus provides a common resource scheduler for container-based applications, independent of the workload type, and integrates with higher-level workflow schedulers.

Titus is a significant part of Netflix’s container story. It serves as the infrastructural foundation for container-based applications at Netflix. Titus provides Netflix-scale cluster and resource management as well as container execution with deep Amazon EC2 integration and common Netflix infrastructure enablement. Netflix has achieved a new level of scale with Titus, launching over one million containers per week. Titus also supports services that are part of Netflix’s streaming service customer experience.

Netflix has implemented multi-tenant isolation using a combination of Linux, Docker, and their own isolation technology. This isolation covers CPU, memory, disk, networking, and security. For containers to be successful at Netflix, they needed to integrate them seamlessly into their existing developer tools and operational infrastructure.

In conclusion, Docker has become an integral part of Netflix’s infrastructure. It provides the flexibility, efficiency, and scalability needed to support their diverse set of applications.

Nubank

Nubank, a Brazilian fintech startup founded in 2013, has been a pioneer in the adoption of Docker containers and runs almost all of its infrastructure on AWS. As the company grew, reaching a customer base of 23 million and expanding its engineering team from 30 to over 520, it began to face challenges with its immutable infrastructure. The deployment process, which relied on spinning up an entire stack or cloning the entire infrastructure for each iteration of development, became increasingly slow and cumbersome. Other issues included load balancing for applications and difficulties in adding new security group rules in AWS.

Despite these challenges, Nubank recognized the benefits Docker had brought to their operations and decided to further invest in containerization. The next step was to find an orchestrator. Although Nubank’s data infrastructure team had been running a Spark cluster on top of Mesos and had considered several different technologies including Docker Swarm, they ultimately chose Kubernetes.

When Nubank began its migration to Kubernetes, their primary goal was to empower developers to run their applications. The cloud native platform at Nubank also includes Prometheus, Thanos, and Grafana for monitoring, and Fluentd for logging. This transition has significantly improved the developer experience, reducing deployment time from 90 minutes to just 15 minutes for production environments. Today, Nubank engineers are deploying 700 times a week. Additionally, the team estimates that Nubank has achieved about 30% cost efficiency through this migration.

Robinhood

Robinhood, a popular financial services company, leverages Docker, a platform that packages applications into containers, for various critical DevOps tasks. One of the key uses is for integration testing.

In this process, Robinhood creates a Docker image for each type of service that needs to be run. These images are like lightweight, standalone, executable software packages that include everything needed to run a piece of software. Databases are pre-migrated and sometimes loaded with initial data to speed up the time it takes to run each integration test. Each service has its own container, and different services can sometimes reuse the same underlying image.

Robinhood also writes mock services for third-party services. This is done by putting some basic code into a Python file and running it with a standard Python image; the Python file can simply be mounted into the container when it runs.
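A mock of this kind can be very small. The sketch below uses only Python’s standard library (http.server) to answer a single hypothetical /quote endpoint with canned JSON. The endpoint, payload, and the file name mock_quote.py are assumptions for illustration, not Robinhood’s code.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical canned response standing in for a third-party quote API.
CANNED_QUOTE = {"symbol": "TEST", "price": "100.00"}


class MockQuoteHandler(BaseHTTPRequestHandler):
    """Serves CANNED_QUOTE on GET /quote and a JSON 404 for any other path."""

    def do_GET(self):
        if self.path == "/quote":
            status, payload = 200, CANNED_QUOTE
        else:
            status, payload = 404, {"error": "not found"}
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        # Silence per-request logging to keep test output readable.
        pass


def make_server(port: int = 8000) -> HTTPServer:
    # Bind to all interfaces so a published container port can reach it.
    return HTTPServer(("0.0.0.0", port), MockQuoteHandler)
```

Saved as mock_quote.py in the current directory, it could be started in a container with something like docker run --rm -p 8000:8000 -v "$PWD":/mocks -w /mocks python:3.12 python -c "from mock_quote import make_server; make_server().serve_forever()", where the image tag is also an assumption.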

To declare the service infrastructure for each test case, Robinhood uses Docker Compose, a tool for defining and running multi-container Docker applications. The infrastructure is declared in a YAML file, which makes each test case much more concise to describe and makes it much easier to add error cases in which a service is down.
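Robinhood’s actual Compose files aren’t public, but the error cases can be illustrated with a short Python sketch: keep one base stack per test and derive variants with a service removed to simulate an outage. The stack (service names, images, the mocked quote service and its mounted directory) is hypothetical, and the dict mirrors the structure a Compose YAML file would have.

```python
import copy
import json

# Hypothetical base stack for one integration test: an app under test, a
# pre-migrated database image and a mocked third-party service.
BASE_STACK = {
    "services": {
        "app": {
            "image": "example/app:test",
            "ports": ["8080:8080"],
            "depends_on": ["db", "mock-quotes"],
        },
        "db": {"image": "example/db-premigrated:test"},
        "mock-quotes": {
            "image": "python:3.12",
            "working_dir": "/mocks",
            "command": ["python", "-c",
                        "from mock_quote import make_server; make_server().serve_forever()"],
            "volumes": ["./mocks:/mocks:ro"],
        },
    }
}


def stack_without(base: dict, down: str) -> dict:
    """Return a copy of the stack with one service removed, simulating an outage."""
    stack = copy.deepcopy(base)
    services = stack["services"]
    if down not in services:
        raise KeyError(f"unknown service: {down}")
    del services[down]
    # Remove references to the dropped service so the file still validates.
    for spec in services.values():
        if "depends_on" in spec:
            spec["depends_on"] = [dep for dep in spec["depends_on"] if dep != down]
    return stack


if __name__ == "__main__":
    # JSON is, in practice, valid YAML, so this output can serve as a Compose file.
    print(json.dumps(stack_without(BASE_STACK, "mock-quotes"), indent=2))
```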

Robinhood exposes endpoints for the end-to-end tests. This allows external testing code to hit the endpoints and check for the expected behavior.

Finally, Robinhood starts up the infrastructure with Docker Compose and simply makes API requests to the exposed endpoints. Sometimes additional checks need to be added to make sure the interaction is actually happening.
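Starting containers does not guarantee that the services inside are ready to accept requests, so end-to-end tests usually poll before sending them. A minimal standard-library sketch follows; the health-check URL in the usage note is hypothetical, and the polling interval and timeout are arbitrary defaults.

```python
import http.client
import time
import urllib.request


def wait_until_ready(url: str, timeout: float = 30.0, interval: float = 0.5) -> None:
    """Poll url until it returns a 2xx response, or raise TimeoutError."""
    deadline = time.monotonic() + timeout
    last_error = None
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval) as response:
                if 200 <= response.status < 300:
                    return
        except (OSError, http.client.HTTPException) as exc:
            # URLError, HTTPError (non-2xx), refused connections and socket
            # timeouts are all OSError subclasses; keep the latest for the message.
            last_error = exc
        time.sleep(interval)
    raise TimeoutError(f"{url} not ready after {timeout}s (last error: {last_error!r})")
```

In a test run, one might call docker compose up -d, then wait_until_ready("http://localhost:8080/health"), and only then issue the API requests whose responses the test checks.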

An example of this setup is a basic integration test, checking an interaction between a sample Django app and Vault. The test case takes around 4 seconds to run, which involves setting up the entire infrastructure, running the tests, and tearing it down.

In summary, Docker provides a much faster way to spin up multiple services than starting multiple VMs. The interactions between services in Robinhood’s critical systems, such as market data and order execution, are all tested this way. This approach helps keep those systems robust and reliable, which is crucial for a financial services company like Robinhood.

Technologies, Frameworks, and Languages Used

Docker is written primarily in Go. It combines several technologies to function and works well with applications written in many programming languages.

Linux Kernel Features: Docker relies on the kernel features of the Linux operating system, specifically control groups (cgroups) and namespaces, to isolate containers and provide resource management. These features allow Docker to allocate CPU, memory, and other resources to each container.
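These limits are visible from inside a container. On hosts using cgroup v2, a container’s CPU limit appears in a cpu.max file as a quota and a period in microseconds, and its memory limit appears in memory.max as a byte count, with max meaning unlimited. The sketch below parses those two files; it assumes the cgroup v2 layout mounted at /sys/fs/cgroup and ignores cgroup v1.

```python
from typing import Optional


def parse_cpu_max(text: str) -> Optional[float]:
    """Convert a cgroup v2 cpu.max value ("<quota> <period>") to a CPU count.

    Returns None when the quota is "max", meaning no CPU limit.
    """
    quota, period = text.split()
    if quota == "max":
        return None
    return int(quota) / int(period)


def parse_memory_max(text: str) -> Optional[int]:
    """Convert a cgroup v2 memory.max value to bytes; None means "max" (no limit)."""
    value = text.strip()
    return None if value == "max" else int(value)


def read_limits(cgroup_dir: str = "/sys/fs/cgroup") -> dict:
    """Read this cgroup's CPU and memory limits (cgroup v2 layout assumed).

    Inside a container on a cgroup v2 host, /sys/fs/cgroup usually shows the
    container's own cgroup; in a host's root cgroup these files may not exist.
    """
    with open(f"{cgroup_dir}/cpu.max") as f:
        cpus = parse_cpu_max(f.read())
    with open(f"{cgroup_dir}/memory.max") as f:
        memory = parse_memory_max(f.read())
    return {"cpus": cpus, "memory_bytes": memory}
```

For a container started with docker run --cpus=1.5 --memory=512m, cpu.max would typically read 150000 100000 and memory.max 536870912, which these functions turn into 1.5 CPUs and 536870912 bytes.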

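containerd: This is a container runtime daemon that Docker uses to manage the complete lifecycle of containers, including pulling images, managing storage, and creating, starting, stopping, and deleting containers. It hands the low-level work of actually creating each container to runc.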

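runc: This is a lightweight command-line tool for spawning and running containers according to the Open Container Initiative (OCI) runtime specification, of which it is the reference implementation. Docker creates containers through runc by default, which helps keep them portable to any system that supports the OCI specification.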
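runc does not read Docker images directly; it runs an OCI bundle, which is a directory holding a root filesystem and a config.json describing the process to start, the root filesystem, and the Linux namespaces to create. The sketch below builds a deliberately minimal config.json as a Python dict to show that shape. The spec version and field values are assumptions, and a bundle runc can actually start needs more (mounts, capabilities), which runc spec generates as a complete default.

```python
import json
from typing import List


def minimal_oci_config(args: List[str], hostname: str = "sketch") -> dict:
    """Build a pared-down OCI runtime config (config.json) as a dict.

    Illustrative only: a startable bundle also needs mounts (/proc, /dev, ...),
    capabilities and more; `runc spec` writes a complete default to start from.
    """
    return {
        "ociVersion": "1.0.2",  # assumed spec version
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": args,
            "env": ["PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"],
            "cwd": "/",
        },
        # Root filesystem directory, relative to the bundle containing config.json.
        "root": {"path": "rootfs", "readonly": True},
        "hostname": hostname,
        "linux": {
            # Each entry asks the runtime to create a new namespace of that type.
            "namespaces": [{"type": ns} for ns in ("pid", "network", "ipc", "uts", "mount")],
        },
    }


if __name__ == "__main__":
    print(json.dumps(minimal_oci_config(["sh"]), indent=2))
```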

Image format: Docker uses a layered image format to store container images. Each layer records only the filesystem changes relative to the layer beneath it, and images that share layers store those layers only once, which makes storing and sharing images efficient.
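Layers are identified by a SHA-256 digest of their content, which is what lets two images built on the same base share those layers on disk and in a registry. The toy store below shows that deduplication. It ignores tar archives, compression, and the distinction between compressed layer digests and uncompressed diff IDs, and the layer contents are stand-in byte strings.

```python
import hashlib
from typing import Dict, List


class LayerStore:
    """Toy content-addressed store: each distinct layer is kept exactly once."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}

    def add_layer(self, content: bytes) -> str:
        digest = "sha256:" + hashlib.sha256(content).hexdigest()
        # Identical content yields the same digest, so it is stored only once.
        self.blobs.setdefault(digest, content)
        return digest

    def add_image(self, layers: List[bytes]) -> List[str]:
        """Store an image's layers (bottom to top) and return their digests."""
        return [self.add_layer(layer) for layer in layers]

    def stored_bytes(self) -> int:
        return sum(len(blob) for blob in self.blobs.values())


if __name__ == "__main__":
    store = LayerStore()
    # Stand-in byte strings for layer tarballs; two images share two base layers.
    base, runtime = b"base OS files", b"language runtime"
    app_one = store.add_image([base, runtime, b"app one code"])
    app_two = store.add_image([base, runtime, b"app two code"])
    print(len(store.blobs), "layers stored for", len(app_one) + len(app_two), "layer references")
```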

Docker can package applications written in virtually any programming language. Some of the most popular languages used with Docker include:

- Python

- Java

- Go

- Node.js

- Ruby

- PHP

These languages can be used to create the applications that will be packaged into Docker containers. There are also many frameworks and libraries available for these languages that can simplify the process of developing and deploying containerized applications.