What is Azure Event Hubs?

Azure Event Hubs is a fully managed real-time data ingestion service provided by Microsoft Azure. It is designed to receive and process large volumes of events generated by applications, devices, or services. Let’s explain each of these in detail:

- This is a service provided by Microsoft Azure. It is part of Azure’s big data ecosystem and is designed to handle real-time data streaming.

- Azure Event Hubs is fully managed, which means that Microsoft Azure handles all the maintenance, updates, and infrastructure management. Users don’t need to worry about the underlying hardware or software and can focus on their data and applications.

- Azure Event Hubs is a real-time data ingestion service, which means that it is designed to take in, or “ingest”, data as it is produced. This is useful for scenarios where you need to process and analyze data as soon as it’s generated.

- Event Hubs is designed to handle massive amounts of data, potentially coming from millions of devices or applications. An “event” in this context is a piece of data or a signal that something has happened, like a click on a website or a temperature reading from a sensor.

- Event Hubs can ingest data from virtually any source, whether it’s an application logging user activity, a device like a sensor in an IoT scenario, or a service like a cloud-based software application.

Key Capabilities

Azure Event Hubs is a scalable event processing service that offers several key capabilities:

High Throughput and Low Latency: Azure Event Hubs can ingest a large volume of events with low latency. This means it can receive and process a lot of information quickly and efficiently.

Apache Kafka Support: Apache Kafka is a popular open-source platform for handling real-time data feeds. Azure Event Hubs is compatible with Kafka, which means you can use Kafka-based applications with Event Hubs.

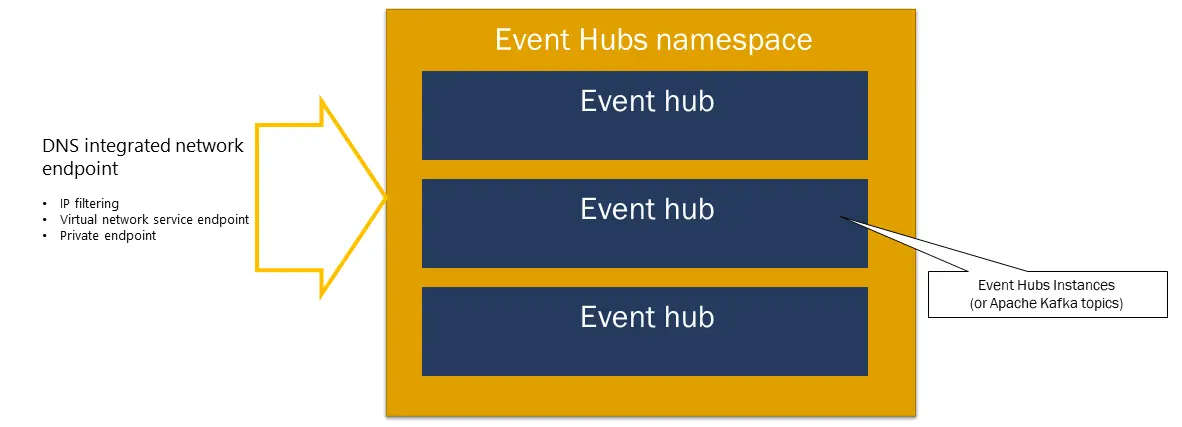

Namespace: In Azure Event Hubs, a namespace is like a container that holds your event hubs. It provides network endpoints that are integrated with DNS and offers features for managing access control and network integration.

Event Publishers: These are entities that send data to an event hub. They can use HTTPS, AMQP 1.0, or the Kafka protocol to publish events.

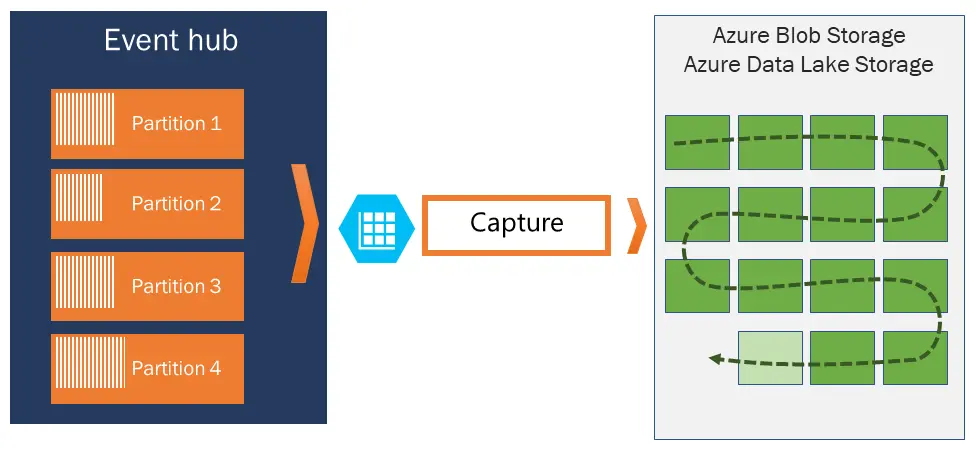

Capture: This feature of Azure Event Hubs automatically batches and archives events to storage. Beyond Capture, the service also provides automatic scaling and balancing, disaster recovery, cost-neutral availability zone support, flexible and secure network integration, and multi-protocol support, including the firewall-friendly AMQP-over-WebSockets protocol.

Real-Time Processing: Azure Event Hubs can be used with Azure Stream Analytics for real-time stream processing. This means you can analyze and process your data as soon as it arrives.

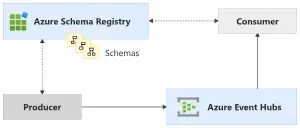

Schema Registry: This is a centralized repository in Event Hubs for managing the schemas of event streaming applications. It ensures that data is compatible and consistent across event producers and consumers.

Scalability: Azure Event Hubs is designed to accommodate growth in data flow. It can scale both horizontally (across different systems) and vertically (within a single system) to handle more data.

Key Features

Real-Time Data Ingestion: Azure Event Hubs can ingest millions of events per second from any source. This allows you to create data pipelines and respond to business challenges in real-time.

Scalability: Azure Event Hubs can dynamically adjust throughput based on your usage needs. This means it can handle both small and large volumes of data easily.

Security: Azure Event Hubs is certified by multiple security standards like CSA STAR, ISO, SOC, GxP, HIPAA, HITRUST, and PCI. This ensures that your real-time data is protected.

Integration with Apache Kafka: If you have existing Apache Kafka clients and applications, they can communicate with Azure Event Hubs without any code changes. This makes it easier to integrate Azure Event Hubs into your existing systems.

Geo-Disaster Recovery and Geo-Replication: These features ensure your data processing can continue even during emergencies. This is crucial for maintaining business continuity.

Schema Registry: This is a centralized repository for managing the schemas shared by event streaming applications that exchange the same data.

Azure Event Hubs is commonly used in scenarios like real-time analytics, big data pipelines, and event-driven architectures. It integrates seamlessly with other Azure services, allowing you to unlock valuable insights from your data.

How Does Azure Event Hubs Work?

Azure Event Hubs can take in a massive amount of data from various sources simultaneously. This is like a post office accepting letters from all over the city. You can set up an “event hub” on Azure, which is like your personal post box. You can send data to this post box using internet protocols like HTTPS and AMQP.

Once your data is in the event hub, it is stored and ready to be processed. This is similar to sorting the letters in the post office. You can process this data in real-time or in small batches at your own pace.

Azure Event Hubs can work together with other Azure services. For example, you can use Azure Stream Analytics to analyze the data you have collected in real-time. This is like having a team of analysts at the post office to make sense of the letters’ contents.

Azure Event Hubs can handle any amount of data, from a few megabytes to several terabytes. It is also designed to keep working even during emergencies, thanks to features like geo-disaster recovery and geo-replication.

If you are already using Apache Kafka (a popular open-source event streaming platform), you can use Azure Event Hubs without changing your code. This is like being able to use your old post box key at a new post office.

Azure Event Hubs is certified by several international standards for data protection. This ensures that your data is safe and secure, like having a lock on your post box.

Pricing

Azure Event Hubs pricing varies depending on the type of tier you choose. Here are the details:

Basic Tier

- Capacity: $0.015/hour per Throughput Unit

- Ingress events: $0.028 per million events

- Max Retention Period: 1 day

- Storage Retention: 84 GB

Standard Tier

- Capacity: $0.03/hour per Throughput Unit

- Ingress events: $0.028 per million events

- Capture: $73/month per Throughput Unit

- Max Retention Period: 7 days

Premium Tier

- Capacity: $1.233/hour per Processing Unit (PU)

- Ingress events: Included

- Capture: Included

- Max Retention Period: 90 days

- Storage Retention: 1 TB per PU

- Extended Retention: $0.12/GB/month (1 TB included per PU)

Dedicated Tier

- Capacity: $6.849/hour per Capacity Unit (CU)

- Ingress events: Included

- Capture: Included

- Max Retention Period: 90 days

- Storage Retention: 10 TB per CU

- Extended Retention: $0.12/GB/month (10 TB included per CU)

Please note that these prices are estimates and actual pricing may vary depending on the type of agreement entered with Microsoft, the date of purchase, and the currency exchange rate. For more accurate pricing based on your current program/offer with Microsoft, you can sign in to the Azure pricing calculator. For additional questions, you can contact an Azure sales specialist.
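As a rough illustration of how the Standard tier rates listed above combine, the sketch below estimates a monthly bill. The function name, the 730-hour month, and the example workload are assumptions made for illustration; the rates are simply the ones quoted in this section and may not match your agreement with Microsoft.

```python
HOURS_PER_MONTH = 730  # assumption: average number of hours in a month

# Standard tier rates as quoted in this article (USD); actual prices may differ.
STANDARD_TU_HOURLY = 0.03
STANDARD_INGRESS_PER_MILLION = 0.028
STANDARD_CAPTURE_MONTHLY_PER_TU = 73.0


def estimate_standard_monthly_cost(throughput_units, million_events, capture=False):
    """Return an estimated monthly cost in USD for the Standard tier."""
    capacity = throughput_units * STANDARD_TU_HOURLY * HOURS_PER_MONTH
    ingress = million_events * STANDARD_INGRESS_PER_MILLION
    capture_cost = throughput_units * STANDARD_CAPTURE_MONTHLY_PER_TU if capture else 0.0
    return round(capacity + ingress + capture_cost, 2)


# Hypothetical workload: 2 TUs, 500 million ingress events per month, no Capture.
print(estimate_standard_monthly_cost(2, 500))
```

Treat the result as a ballpark figure only; the Azure pricing calculator remains the authoritative source.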

Apache Kafka on Azure Event Hubs

Azure Event Hubs provides a Kafka endpoint that allows you to connect to the event hub using the Kafka protocol. This means you can stream data from your Apache Kafka applications into Azure Event Hubs without needing to set up your own Kafka cluster. In many cases, you can use the Kafka endpoint in your applications without changing any code. You just need to update your application’s configuration to point to the Kafka endpoint provided by your event hub.

Once your application is configured to point to the Kafka endpoint, you can start streaming events into the event hub. In this context, event hubs are equivalent to Kafka topics. Event Hubs for the Kafka ecosystem supports Apache Kafka version 1.0 and later.
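To make the configuration change concrete, here is a minimal sketch of the client settings a Kafka application would typically use to reach the Kafka endpoint. The function name is hypothetical, the namespace and connection string are placeholders, and the keys follow the librdkafka naming used by clients such as confluent-kafka; other Kafka clients spell these settings differently.

```python
def event_hubs_kafka_config(namespace, connection_string):
    """Build Kafka client settings that target an Event Hubs Kafka endpoint."""
    return {
        # The Kafka endpoint listens on port 9093 of the namespace host name.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # The user name is the literal string "$ConnectionString";
        # the password is the namespace connection string itself.
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


# Placeholder values; substitute your own namespace and connection string.
config = event_hubs_kafka_config("my-namespace", "<your connection string>")
print(config["bootstrap.servers"])
```

A dictionary like this could be passed to a Kafka producer or consumer constructor, with the event hub name used as the topic name.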

Unlike Apache Kafka, which you need to install and operate for yourself, Event Hubs is a fully managed service in the cloud. This means there are no servers, disks, or networks for you to manage or monitor.

In Event Hubs, you create a namespace, which is an endpoint with a fully qualified domain name. Within this namespace, you create Event Hubs, which are equivalent to topics in Kafka.

Event Hubs uses a single stable virtual IP address as the endpoint. This means your clients don’t need to know about the individual brokers or machines within a cluster. The scale in Event Hubs is controlled by how many throughput units (TUs) or processing units you purchase.

Conceptual Mapping of Apache Kafka and Azure Event Hubs

Conceptually, Apache Kafka and Azure Event Hubs are very similar. They are both partitioned logs built for streaming data, whereby the client controls which part of the retained log it wants to read. Here is a conceptual mapping between Kafka and Event Hubs:

| Kafka Concept | Event Hubs Concept |
| --- | --- |
| Cluster | Namespace |
| Topic | An event hub |
| Partition | Partition |
| Consumer Group | Consumer Group |
| Offset | Offset |

Key Differences Between Apache Kafka and Azure Event Hubs

Apache Kafka and Azure Event Hubs are both designed for handling real-time data feeds, but they have some key differences:

| Feature | Apache Kafka | Azure Event Hubs |
| --- | --- | --- |
| Managed Service | Self-managed | Fully managed |
| Integration with ecosystem | Platform-agnostic | Native integration with Azure services |
| Scale Control | Depends on the setup | Controlled by throughput units (TUs) or processing units |
| Consumer Groups | Standard Kafka consumer groups | Distinct from standard Event Hubs consumer groups |
| Security and Authentication | Requires firewall access for all brokers of a cluster | Uses a single stable virtual IP address as the endpoint |

What is Azure Schema Registry?

Azure Schema Registry is like a library where you can store and manage schemas. A schema is a blueprint that defines how data is organized. It is used in applications that deal with data exchange, like event-driven or messaging-centric applications.

Normally, if two applications want to exchange data, they need to agree on a schema. But with Azure Schema Registry, they can just refer to the schema in the registry, making it easier to manage.

Azure Schema Registry provides a way to manage and reuse schemas. It also allows you to define relationships between different schemas. Some serialization frameworks, like Apache Avro, use schemas to serialize data. By storing the schema in Azure Schema Registry, you can reduce the amount of data that needs to be sent with each message.

Because the schemas are stored in Azure Schema Registry, they’re always available when you need them for serialization or deserialization. This means you don’t have to worry about losing or misplacing them. The Azure Schema Registry feature is not available in the basic tier of Azure Event Hubs. You would need to upgrade to a higher tier to use it.

Terminologies

Namespace

In the context of Azure Event Hubs, a namespace is like a container that holds one or more event hubs. It is a way to group related event hubs together. It provides DNS-integrated endpoints and a range of access control and network integration management features such as IP filtering, virtual network service endpoint, and Private Link. DNS-integrated endpoints are network addresses that are integrated with the Domain Name System (DNS). This means you can access the event hubs in a namespace using a human-readable address, rather than an IP address.

Access control and network integration management are security features provided by Azure. For example, IP filtering allows you to control access to your event hubs based on IP address. Virtual network service endpoint and Private Link are features that allow you to securely connect your Azure resources.

If you are familiar with Apache Kafka, an open-source event streaming platform, you can think of event hubs as equivalent to Kafka topics. Most existing Kafka applications can be reconfigured to use an Event Hub namespace.

In terms of deployment, the namespaces/eventhubs resource type can be deployed with operations that target resource groups. This refers to the process of setting up your event hubs on Azure. The namespaces/eventhubs resource type indicates that event hubs are a type of resource that is deployed within a namespace.

Event Publishers

In Azure Event Hubs, an event publisher is an entity that sends data to an event hub. It’s also known as an event producer. Event publishers can send data using different protocols such as HTTPS, AMQP 1.0, or the Kafka protocol.

To publish data, event publishers need to be authorized. They can use Microsoft Entra ID-based authorization with OAuth2-issued JWT tokens or an Event Hub-specific Shared Access Signature (SAS) token.

Azure provides a REST API and client libraries in various programming languages (.NET, Java, Python, JavaScript, and Go) for publishing events. For other platforms, any AMQP 1.0 client, such as Apache Qpid, can be used.

The choice between AMQP and HTTPS depends on the specific use case. AMQP requires a persistent bidirectional socket secured with transport-level security (TLS). It has higher network costs when initializing the session, but it offers higher performance for frequent publishers and can achieve lower latencies when used with asynchronous publishing code. HTTPS, on the other hand, incurs extra TLS overhead for every request.

Publishing an Event

First, a connection is established with the Azure Event Hubs service using a connection string. This connection string contains the necessary information for authentication and can be found in your Azure portal. An Event Hub Producer client is created using the connection string and the name of the Event Hub. This client is responsible for sending events to the Event Hub.

A batch is created using the producer client. A batch is a collection of events that are sent to the Event Hub together. Batching events together can improve efficiency and throughput. Events are added to the batch. An event is essentially a piece of data or a message that you want to send. In this case, three events are added to the batch.

The batch of events is sent to the Event Hub using the producer client. This is an asynchronous operation, meaning it runs in the background and doesn’t block other operations. A confirmation message is printed to the console once the batch of events has been successfully published.

This process allows you to send multiple events to the Azure Event Hubs service efficiently and reliably.
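The steps above can be sketched with the `azure-eventhub` Python package. This is a minimal illustration rather than the original sample: the helper names and the payload contents are hypothetical, the connection string and event hub name are placeholders you would supply, and the sketch uses the synchronous client (the package also offers an asynchronous client under `azure.eventhub.aio`).

```python
import json


def build_payloads():
    """Return three small JSON payloads to publish (hypothetical sample data)."""
    return [json.dumps({"reading": number}) for number in (1, 2, 3)]


def publish(connection_string, eventhub_name, payloads):
    """Send all payloads to the event hub as a single batch."""
    # Imported here so the rest of the sketch loads without the SDK installed.
    from azure.eventhub import EventData, EventHubProducerClient

    producer = EventHubProducerClient.from_connection_string(
        connection_string, eventhub_name=eventhub_name
    )
    with producer:  # closes the connection when the block exits
        batch = producer.create_batch()
        for payload in payloads:
            batch.add(EventData(payload))  # raises ValueError if the batch is full
        producer.send_batch(batch)
    print(f"A batch of {len(payloads)} events has been published.")
```

Calling `publish("<connection string>", "<event hub name>", build_payloads())` would send the three events in one batch, assuming the connection string grants send rights.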

Event Retention

Event Retention in Azure Event Hubs refers to how long the events (data) are kept in the system before they are automatically deleted. This duration is configurable and applies to all partitions in the Event Hub.

The default value and shortest possible retention period is 1 hour. Currently, you can set the retention period in hours only in the Azure portal. Resource Manager template, PowerShell, and CLI allow this property to be set only in days.

In the standard tier of Azure Event Hubs, the maximum duration you can set for event retention is 7 days. This means that any event that enters the Event Hub will be available for processing for up to 7 days, after which it will be deleted.

In the premium and dedicated tiers of Azure Event Hubs, you can set the event retention period up to 90 days. This means that events will be available for processing for up to 90 days, after which they will be deleted.

It’s important to note that if you change the retention period, it applies to all events, including those that are already in the Event Hub. However, Event Hubs are not meant to be a permanent data store. Retention periods greater than 24 hours are typically used in scenarios where it’s useful to replay a stream of events into the same systems.

Publisher Policy

In Azure Event Hubs, Publisher Policy is a runtime feature designed to facilitate a large number of independent event publishers. Here’s a brief overview:

Unique Identifier: Each publisher has its own unique identifier when publishing events to an event hub. This is a unique name that each publisher uses when they send events to an event hub. Think of it as a unique name tag that identifies each publisher.

HTTP Mechanism: This is the method used by publishers to send events. They use a specific URL format (https://<my namespace>.servicebus.windows.net/<event hub name>/publishers/<my publisher name>) that includes their unique identifier. It is like their own personal mailbox in the event hub.

Granular Control: This means that you can have fine-tuned control over each individual publisher. For example, you can set different permissions or rules for each publisher.

SAS-Based Publisher Policy: SAS stands for Shared Access Signature. It is a type of security mechanism in Azure. In this context, each publisher is given a SAS token that authorizes them to publish events. This token is time-bound, meaning it expires after a certain period.

Use in IoT: In IoT scenarios, each device can be considered as a publisher with a unique identifier. This makes the Publisher Policy particularly useful in IoT scenarios.

Capture

Capture is a feature of Azure Event Hubs. It automatically saves the data that streams through Event Hubs to an Azure Blob Storage or Azure Data Lake Storage Gen 1 or Gen 2 account that you choose. Here are some key points about it:

- You can set a specific time or size interval for the capture. For example, you could tell it to save the data every 15 minutes or every time it accumulates 50MB of data.

- Setting up Capture is quick and easy, and it doesn’t require any additional administrative costs. It also scales automatically with your Event Hubs throughput units (TUs), which means it can handle as much data as you need it to. It is the simplest way to load streaming data into Azure.

- Once the data is captured, you can process it in real-time or in batches. This means you can analyze the data as it comes in, or you can store it and analyze it later.

- The storage account where you save the captured data must be in the same subscription as the event hub. Also, Event Hubs doesn’t support capturing events in a premium storage account.

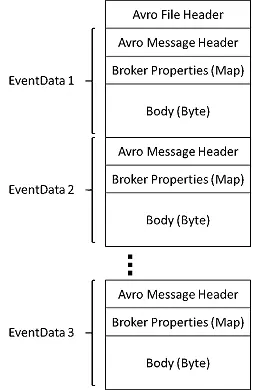

- The captured data is written in Apache Avro format, a binary data format. If you are using Azure Data Lake Storage Gen2, you can also capture the data in Parquet format, a columnar storage file format.

- You can configure the capture settings in the Azure portal when you create an event hub or for an existing event hub.

The files produced by Event Hubs Capture have the following Avro schema:

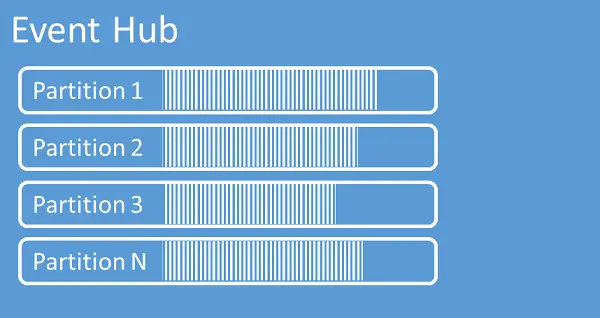

Partitions

Azure Event Hubs uses a partitioning model to process multiple events at the same time while keeping the order of events intact. This model helps in distributing the load and scaling the system.

When data producers send events to the pipeline, these events are distributed among different partitions. The order of events is maintained within each partition, but not across different partitions. The number of partitions can influence the throughput, which is the amount of data that can be processed by the system in a given time.
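The ordering guarantee is easier to see with a small simulation. The sketch below assumes events carry a key and routes each key to a partition by hashing it; the function names are hypothetical, and SHA-256 is only a stand-in, since the hashing Event Hubs applies internally is not described here.

```python
import hashlib
from collections import defaultdict


def assign_partition(partition_key, partition_count):
    """Map a key to a partition index with a stable hash (illustrative only)."""
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def distribute(events, partition_count):
    """Append each (key, body) event to the partition chosen for its key."""
    partitions = defaultdict(list)
    for key, body in events:
        partitions[assign_partition(key, partition_count)].append((key, body))
    return partitions


# Hypothetical events from two devices, interleaved in arrival order.
events = [("device-a", 1), ("device-b", 1), ("device-a", 2), ("device-a", 3)]
for index, contents in sorted(distribute(events, 4).items()):
    print(index, contents)
```

Because every event with the same key lands in the same partition, the readings from one device stay in order there, while nothing is promised about the relative order of events in different partitions.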

Partitions are part of event streams, which are named collections of events. In Azure Event Hubs, these streams are referred to as event hubs.

A consumer is an entity that reads a specific subset of the event stream, which can span multiple partitions. However, each partition can be assigned to only one consumer at a time. A consumer group is a collection of consumers. When a consumer group subscribes to a topic, each consumer in the group gets a separate view of the event stream. Consumers use a process called checkpointing to keep track of their position within a partition event sequence. They use offsets (markers) for this purpose.

Each partition holds event data, which includes the event body, a set of user-defined properties describing the event, and metadata such as its offset in the partition, its sequence number in the stream, and the timestamp when it was accepted by the service.

SAS Tokens

Shared Access Signatures, or SAS, are a type of security token used in Azure Event Hubs. They are a way to delegate access to Event Hubs resources without sharing your account keys. This is done by creating a signature string that includes specific information, such as the resource URI, the permissions granted, and the token expiry time. This string is then signed with a key that is associated with the resource’s authorization rule.

Generating SAS Tokens

A SAS token is generated by a client who has access to the name of an authorization rule and one of its signing keys. The authorization rule defines the rights that are granted by the SAS, and the signing key is used to cryptographically sign the token. This ensures that the token is valid and hasn’t been tampered with.
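As a sketch of what that signing step looks like, the function below builds a token from a resource URI, a rule name, and a key using only the Python standard library. The function and parameter names are hypothetical, and the `now` argument exists only to make the example reproducible; the token layout (`sr`, `sig`, `se`, `skn`) follows the commonly documented SAS format.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse


def generate_sas_token(resource_uri, key_name, key, ttl_seconds=3600, now=None):
    """Return a SAS token string that is valid for ttl_seconds."""
    current_time = time.time() if now is None else now
    expiry = int(current_time + ttl_seconds)
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    # The signature covers the URL-encoded resource URI and the expiry time.
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    digest = hmac.new(key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote(base64.b64encode(digest), safe="")
    return (
        f"SharedAccessSignature sr={encoded_uri}"
        f"&sig={signature}&se={expiry}&skn={key_name}"
    )


# Placeholder namespace, event hub, rule name, and key.
print(generate_sas_token(
    "https://my-namespace.servicebus.windows.net/my-hub", "send-rule", "<key>"
))
```

Anyone holding the resulting string can use it until the expiry time, so a short `ttl_seconds` is usually the safer choice.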

Control with SAS

With SAS, you can specify what permissions are granted to the client. For example, you might grant ‘listen’ permissions, which allow the client to read from the Event Hub, but not ‘send’ permissions, which would allow the client to write to the Event Hub. You can also set the validity period for the SAS, including the start time and expiry time. This allows you to limit the duration of access granted by the SAS.

Configuring SAS

SAS rules can be configured at two levels in Event Hubs: at the namespace level, and the entity level. A namespace is a container for a set of messaging entities (Event Hubs, Kafka Topics, etc.). An entity is a specific instance of a messaging entity within a namespace. You can define SAS rules for a namespace, which apply to all entities within that namespace, or for a specific entity. However, you cannot define SAS rules for a consumer group, which is a view of an Event Hub or Kafka Topic that allows multiple consumers to read from the same Event Hub or Kafka Topic in parallel.

While SAS provides a powerful and flexible way to control access to Event Hubs resources, it’s important to use them securely. Microsoft recommends using Microsoft Entra credentials when possible, as they offer additional security benefits. SAS tokens should be protected and not shared publicly, as anyone who obtains a SAS token can use it to access the resources it grants permissions to until the token expires.

Event Consumers

In Azure Event Hubs, an event consumer is any application that reads data from the Event Hub.

Consumer Groups

In Azure Event Hubs, each consumer group is like a separate subscription to the event stream. It provides a unique view of the entire event stream. This allows multiple applications to read from the same Event Hub without interfering with each other. Each application can read at its own pace and maintain its own position in the stream.

Checkpointing

Checkpointing is a mechanism that allows an event consumer to mark its position in the event stream. This is crucial for resiliency. If a consumer disconnects from the stream, it can use the checkpoint to resume reading from where it left off.
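The idea can be illustrated with a small in-memory simulation. Everything here is hypothetical scaffolding: a partition is modeled as a plain list, and the checkpoint store is a dictionary, whereas a real consumer would persist checkpoints in durable storage.

```python
class InMemoryCheckpointStore:
    """Remember the last processed position per consumer group and partition."""

    def __init__(self):
        self._positions = {}

    def save(self, consumer_group, partition_id, sequence_number):
        self._positions[(consumer_group, partition_id)] = sequence_number

    def load(self, consumer_group, partition_id):
        # -1 means nothing has been processed yet.
        return self._positions.get((consumer_group, partition_id), -1)


def read_from_checkpoint(partition, store, consumer_group, partition_id, max_events):
    """Read up to max_events after the checkpoint, then advance the checkpoint."""
    start = store.load(consumer_group, partition_id) + 1
    batch = partition[start:start + max_events]
    if batch:
        store.save(consumer_group, partition_id, start + len(batch) - 1)
    return batch


partition = ["e0", "e1", "e2", "e3", "e4"]
store = InMemoryCheckpointStore()
print(read_from_checkpoint(partition, store, "analytics", "0", 2))
# A consumer that restarts later resumes after the saved position.
print(read_from_checkpoint(partition, store, "analytics", "0", 2))
# A different consumer group keeps its own position and starts from the beginning.
print(read_from_checkpoint(partition, store, "archiver", "0", 1))
```

Keying the store by consumer group as well as partition is what lets each group read at its own pace without disturbing the others.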

Log Compaction

Log compaction is a feature that allows Azure Event Hubs to retain the last known value for each event key. This is different from time-based retention where events are purged after a certain period. Log compaction can be useful in scenarios where you stream the same set of updatable events.
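A short simulation shows the effect. The function below is a hypothetical model that treats the log as a list of (key, value) pairs; it illustrates the retention rule only and says nothing about how or when the service performs compaction.

```python
def compact(log):
    """Keep only the most recent event for each key, preserving log order."""
    latest_index = {}
    for index, (key, _value) in enumerate(log):
        latest_index[key] = index
    return [event for index, event in enumerate(log) if latest_index[event[0]] == index]


# Hypothetical stream of updates keyed by device.
log = [("a", 1), ("b", 2), ("a", 3), ("c", 4), ("b", 5)]
print(compact(log))
```

With time-based retention, by contrast, all five events would remain until they aged out, regardless of their keys.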

Consuming Events with Azure Functions

Azure Functions can respond to events sent to an Event Hub event stream using triggers. Each instance of an Event Hubs triggered function is backed by a single EventProcessorHost instance. The trigger ensures that only one EventProcessorHost instance can get a lease on a given partition.

AMQP Channels

AMQP (Advanced Message Queuing Protocol) is the primary protocol of Azure Event Hubs. It provides structure for binary data streams that flow in either direction of a network connection. AMQP channels make data availability easier for clients. Azure Event Hubs supports connections over TCP port 5671, whereby the TCP connection is first overlaid with TLS before entering the AMQP protocol handshake.

Application Groups

An Application Group is essentially a collection of client applications that interact with the Event Hubs data plane. This means that any application that sends or receives data from Event Hubs can be part of an Application Group. The scope of an Application Group can be a single Event Hubs namespace or specific event hubs (entities) within a namespace.

Each Application Group is identified by a unique condition such as the security context of the client application. This could be Shared Access Signatures (SAS) or Microsoft Entra application ID. This unique identifier helps Azure Event Hubs to associate client applications with their respective Application Groups.

Policies within an Application Group control the data plane access of the client applications that are part of the group. These policies can be used to manage and control how the client applications interact with the Event Hubs data plane. Currently, Application Groups support throttling policies which can be used to limit the rate at which client applications can send or receive data.

Application Groups are available only in the premium and dedicated tiers of Azure Event Hubs. This means that you would need to be on one of these tiers to be able to use Application Groups.

Application Groups and Consumer Groups in Azure Event Hubs are separate concepts and have no direct association. A Consumer Group is a view (state, position, or offset) of an entire event hub. Depending on the Application Group identifier such as security context, one Consumer Group can have one or more Application Groups associated with it or one Application Group can span across multiple Consumer Groups.

Remember, client applications interacting with Event Hubs don’t need to be aware of the existence of an Application Group. Event Hubs can associate any client application to an Application Group by using the identifying condition. This allows for better management and control over client applications and their interactions with the Event Hubs data plane.

Quotas

Common Limits for All Tiers

- Size of an event hub name: 256 characters. This is the maximum length of the name you can give to an event hub. It is the same across all tiers.

- Size of a consumer group name: 256 characters for Kafka, 50 characters for AMQP. This is the maximum length of the name you can give to a consumer group, and the limit differs between the two protocols. Note that the Kafka protocol doesn’t require the creation of a consumer group.

- Number of non-epoch receivers per consumer group: 5. This is the maximum number of non-epoch receivers that can be created per consumer group. An epoch receiver is a receiver that uses the epoch property to override other receivers. Non-epoch receivers are those without this property.

- Number of authorization rules per namespace: 12. This is the maximum number of authorization rules that can be created per namespace. Authorization rules define the rights of a client to perform various operations on the Event Hubs service like send, listen, and manage.

- Number of calls to the GetRuntimeInformation method: 50 per second. This is the maximum rate at which the GetRuntimeInformation method can be called. This method provides information about the runtime operation of an Event Hub.

- Number of virtual networks (VNet): 128. This is the maximum number of virtual networks that can be associated with an Event Hubs namespace.

- Number of IP Config rules: This limit caps the number of IP Config rules that can be set for an Event Hubs namespace. These rules define the IP addresses or IP ranges that are allowed or denied access to the Event Hubs service.

These limits are designed to ensure the service operates reliably and to prevent misuse. They apply to all tiers of the service, regardless of the specific features and capacities of each tier. If you need to exceed these limits, you may need to contact Azure support or consider other service options.

Basic vs. Standard vs. Premium vs. Dedicated Tiers

| Limit | Basic | Standard | Premium | Dedicated |
| --- | --- | --- | --- | --- |
| Maximum size of Event Hubs publication | 256 KB | 1 MB | 1 MB | 1 MB |
| Number of consumer groups per event hub | 1 | 20 | 100 | 1000 (no limit per CU) |
| Number of brokered connections per namespace | 100 | 5,000 | 10,000 per PU | 100,000 per CU |
| Maximum retention period of event data | 1 day | 7 days | 90 days | 90 days |
| Number of partitions per event hub | 32 | 32 | 100 per event hub (with a limit of 200 per PU at the namespace level) | 1024 per event hub (2000 per CU) |

Choose Between Azure Messaging Services - Event Grid, Event Hubs, and Service Bus

Azure offers three services that assist with delivering events or messages throughout a solution: Azure Event Grid, Azure Event Hubs, and Azure Service Bus. Although they have some similarities, each service is designed for particular scenarios.

Azure Event Grid

Azure Event Grid is a service that routes events from any source to any destination. It is designed for building applications with event-based architectures and for automating operations, e.g., deploying infrastructure with Azure Resource Manager templates.

- Publish-Subscribe Model: Event Grid uses the publish-subscribe model. In this model, senders (publishers) categorize published events into classes without knowing the subscriber’s identity. Subscribers express interest in one or more classes and only receive events that are of interest, without knowing the identity of the publishers.

- Event Handlers: Event Grid has built-in support for events coming from Azure services, like storage blobs and resource groups. Event Grid also has support for your own events, using custom topics.

Azure Event Hubs

Azure Event Hubs is a big data pipeline. It facilitates the capture, retention, and replay of telemetry and event stream data. The data can come from many concurrent sources. Event Hubs buffers data and allows it to be read from the same system or multiple systems.

- Event Streaming: Event Hubs is designed to handle high throughput and low latency, which makes it useful for IoT, telemetry, logging, and streaming scenarios.

- Partitions: Event Hubs uses partitions to provide data organization and throughput. This way, the data is organized and can be read in sequence.

Azure Service Bus

Azure Service Bus is a messaging infrastructure that sits between applications allowing them to exchange messages for improved scale and resiliency.

- Transactional Messaging: Service Bus is a brokered, message-based communication mechanism, not a distributed log system. It focuses on message ordering and delivery without loss.

- Request/Reply Pattern: Service Bus is particularly useful in scenarios where you need to have two-way communication between the sender and the receiver. The pattern is common in scenarios where the sender and the receiver are not necessarily available at the same time.

Each of these services is designed for specific use cases, and often, they can be used together in a complementary way depending on the needs of your application.

Comparison of Services

| Service | Ideal For | Key Features |
| --- | --- | --- |
| Azure Event Grid | Event-based architectures, automation of operations | Publish-Subscribe model, Event Handlers, Deep integration with Azure services |
| Azure Event Hubs | Receiving massive volumes of data, IoT, telemetry, logging, and streaming scenarios | Event Streaming, Partitions for data organization and throughput |
| Azure Service Bus | High-value enterprise messaging, scenarios where sender and receiver are not necessarily available at the same time | Transactional Messaging, Request/Reply Pattern |

Using the Services Together

Azure Event Grid, Event Hubs, and Service Bus can indeed be used together to create powerful, flexible, and robust event-driven architectures. Here’s how they can complement each other:

Event Grid and Service Bus: In a scenario where you have intermittent traffic, messages might arrive in a Service Bus queue or topic subscription but there might not be any receivers to process them. In such cases, Service Bus can emit events to the Event Grid when there are messages in the queue or subscription. You can then create an Event Grid subscription for your Service Bus namespaces, listen to these events, and react to the events by starting a receiver. This allows you to use Service Bus in reactive programming models.

Event Grid and Event Hubs: Event Grid can route events to Event Hubs. This is particularly useful when you want to capture and process a large volume of events in near real-time. For example, you could use Event Grid to trigger an Azure Function that processes an order and then sends an event to Event Hubs to update a real-time dashboard.

Service Bus and Event Hubs: Service Bus and Event Hubs can be used together to handle different aspects of messaging. For instance, an e-commerce site can use Service Bus to process orders, while Event Hubs can be used to capture site telemetry. The order processing system (using Service Bus) and the telemetry system (using Event Hubs) can operate independently but can also be connected if needed.

In some cases, you might use the services side by side to fulfill distinct roles. For example, an e-commerce site can use Service Bus to process the order, Event Hubs to capture site telemetry, and Event Grid to respond to events like an item was shipped. In other cases, you might link them together to form an event and data pipeline.

Remember, the choice of using these services together depends on your specific use case and requirements. It is always a good idea to understand the strengths and capabilities of each service before deciding how to combine them.