What is A/B Testing?

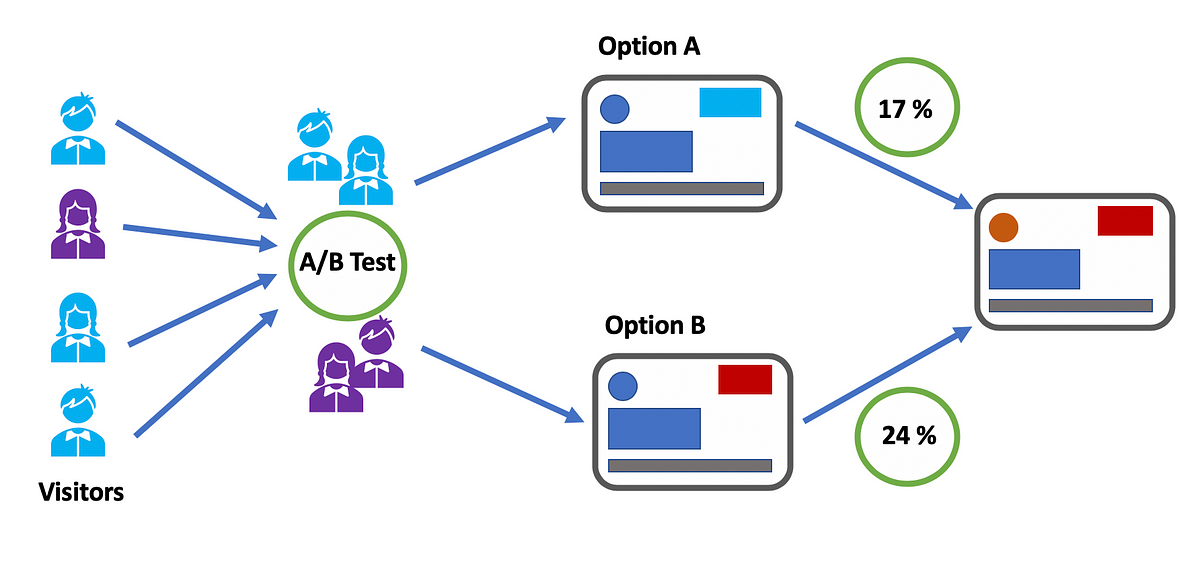

A/B testing, also known as bucket testing, split-run testing, or split testing, is a user-experience research methodology. It is a way to compare multiple versions of a single variable, for example by testing a subject’s response to variant A against variant B, and determining which of the variants is more effective.

In A/B testing, A refers to ‘control’ or the original testing variable. In contrast, B refers to ‘variation’ or a new version of the original testing variable. The version that moves your business metric(s) in the positive direction is known as the ‘winner’.

A/B tests are useful for understanding user engagement and satisfaction with online features, such as a new feature or product. Large social media sites like LinkedIn, Facebook, and Instagram use A/B testing to improve the user experience and streamline their services.

A/B testing is used by data engineers, marketers, designers, software engineers, and entrepreneurs, among others. Many positions rely on the data from A/B tests, as they allow companies to understand growth, increase revenue, and optimize customer satisfaction.

How Does A/B Testing Work?

A/B testing compares two versions of a variable to determine which one performs better. Here’s how it works:

Identify the Variable to Test: This could be a landing page, an email, or a pay-per-click (PPC) ad. You can use A/B testing to discover how changes to these variables affect outcomes, such as whether reducing the number of form fields in the checkout process increases purchases.

Create Two Versions: One version is the “control,” the version already in use. The second version changes a single element. Each user is randomly shown one version or the other to avoid skewing the results.

Split Your Audience: Users are divided into two groups, the “A” group and the “B” group, and funneled into separate digital experiences.

Run the Test and Analyze the Results: Changes to your product or feature can be tested on small, segmented groups called cohorts to verify effectiveness while minimizing friction. The behaviors of the experimental group are compared with the behaviors of your control group to see which version produces better results.

Repeat the Test: To improve the chances that your changes fuel the adoption of your product instead of eliciting churn, you should repeat the experiment using different segments.
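The “Split Your Audience” step is often implemented by hashing a stable user identifier, so assignment is effectively random across users but consistent for each user on every visit. The sketch below assumes a string user ID; `assign_variant` and the experiment name are hypothetical, not the API of any particular testing tool.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to one of the variants."""
    # Hashing experiment name + user ID gives a stable, roughly uniform bucket.
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# Across many users the split should come out close to 50/50.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "checkout-form-fields")] += 1
print(counts)
```

Including the experiment name in the hash means a user’s group in one test does not determine their group in another.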

A/B testing is a reliable tool used in a variety of situations. It helps product managers, marketers, designers, and more make data-backed decisions that drive real, quantifiable results. It’s used to optimize marketing campaigns, improve UI/UX, and increase conversions.

Benefits of A/B Testing

A/B testing offers several benefits that can help improve your website, app, or marketing campaign. Here are some reasons why you should consider A/B testing:

Improved User Engagement: A/B testing allows you to make data-driven decisions about changes to your content, leading to improvements that can drive user engagement. For example, testing different button colors on your website or app can reveal which color leads to more clicks.

Reduced Bounce Rates: By identifying and improving areas of your site that users quickly leave, or “bounce” from, you can enhance user experience and retain more visitors.

Increased Conversion Rates: A/B testing can help you understand what works best for your audience, enabling you to make changes that increase the likelihood of a user taking a desired action, such as making a purchase or signing up for a newsletter.

Risk Minimization: By testing changes on a small user group first, you can minimize the potential negative impacts of a change.

Effective Content Creation: A/B testing provides insights into what type of content resonates with your audience, helping you create more effective content in the future.

Data-Driven Decisions: A/B testing removes guesswork and provides quantifiable data, enabling you to make informed decisions.

Why Should You Do A/B Testing?

A/B testing allows people and companies to change user experiences while collecting data on the results. This helps them learn which changes impact user behavior the most. It’s not just for answering a single question; it can also be used to improve an experience or a goal like Conversion Rate Optimization (CRO) over time.

For example, a B2B tech company may want to get better sales leads from campaign landing pages. To do this, they could use A/B testing to change things like the headline, subject line, form fields, call-to-action, and page layout. Testing one change at a time lets them see which changes affect visitor behavior. Over time, they can combine the effects of multiple successful changes to show the improvement of a new experience over the old one.

This method also allows user experience to be optimized for a desired outcome, making key steps in a marketing campaign more effective. By testing ad copy, marketers can learn which versions get more clicks. By testing the landing page, they can learn which layout converts visitors to customers best. The total cost of a marketing campaign can actually be lowered if each step’s elements work as efficiently as possible to get new customers.

A/B testing can also be used by product developers and designers to show the impact of new features or changes to a user experience. Things like product onboarding, user engagement, modals, and in-product experiences can all be improved with A/B testing, as long as the goals are clear and there’s a clear hypothesis.

A/B Testing Process

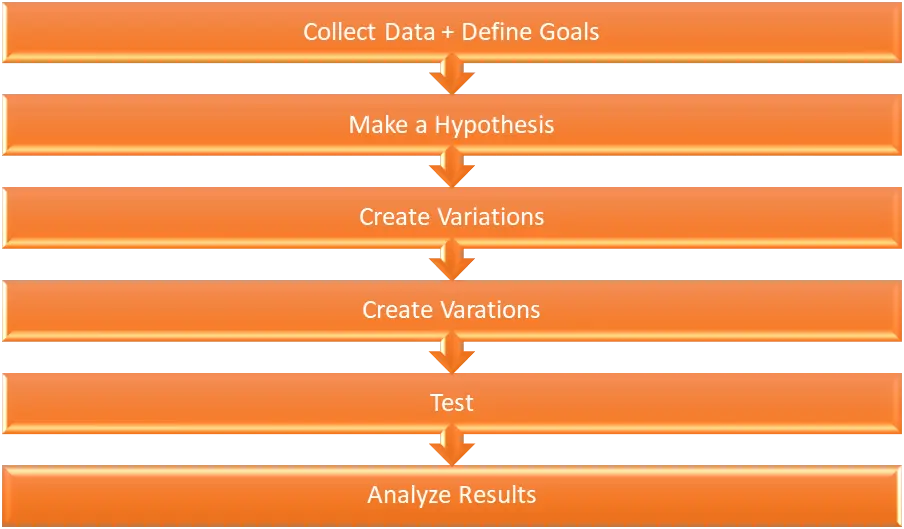

A/B testing, also known as split testing, is a marketing experiment where you split your audience to test variations of a campaign and determine which performs better. Here’s a basic process for conducting an A/B test:

Collect Data and Identify Goals: Start by collecting enough data from your site. Find the pages with low conversion rates or high drop-off rates that can be improved. Also, calculate how many visitors per day you need to run the test on your website.

The next step is to set your conversion goals. These goals come from your business objectives. For example, if you need to increase clothing sales, your goals could be increasing site visits or reducing cart abandonment, supported by changes such as clearer product images.

You need to define metrics that reflect your business goals. A metric becomes a Key Performance Indicator (KPI) only when it measures something connected to your objectives. For instance, if your clothing store’s business goal is to sell clothes, the KPI for this goal could be the number of items sold online.

Make a Hypothesis: Formulate a hypothesis about one or two changes you think will improve the page’s conversion rate. This hypothesis should be based on your research and should be specific, measurable, achievable, relevant, and time-bound.

Create Variations: Create a variation or variations of that page with one change per variation. This could involve changing the color of a call-to-action (CTA) button, altering the headline, or modifying the layout.

Run the Test: Divide incoming traffic equally among the original page and each variation. Monitor the performance of each version over a specified period, which should be long enough to reach the sample size needed for a statistically reliable result.

Analyze Results: After the test, analyze the results to see which variation performed better. Use statistical analysis to determine if the differences in performance are statistically significant.
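For a conversion-rate metric, one common frequentist way to carry out the “Analyze Results” step is a two-proportion z-test. This is a minimal standard-library sketch; the function name and the visitor and conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both variants convert at the same rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled conversion rate, assuming H0 is true.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("all or no visitors converted; the test is undefined")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 10,000 visitors per version.
z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=580, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Compare the p-value with the significance level you chose before the test started (0.05 is a common choice); a smaller p-value means the observed difference would be unlikely if both versions truly converted at the same rate.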

Note: A/B testing can be complex if you are not careful. It is important to test one variable at a time to ensure you don’t make incorrect assumptions about what your audience likes. The goal is to make data-backed decisions that can optimize your website and increase business ROI.

A/B testing helps marketers better understand what their users or visitors want, so they can deliver it and encourage a desired action. A common example is modifying landing pages to see which design results in higher conversions. The variation could be as simple as testing a headline or header image to see how users respond. The aim is to see which of the different versions is more popular with your customers.

What Can You A/B Test?

Headlines and Subheadlines: The headline is the first notable thing a user sees on your webpage. Headlines and subheadlines shape the first and last impressions of visitors and influence their decision to become paying customers. Testing different headlines can help you understand which ones grab your users’ attention and get them to engage with your content. You can test different lengths, tones (serious, humorous, etc.), or formats (question, statement, etc.).

Body: Your website’s main text or body should clearly articulate the benefits that visitors will gain. This message should align with the headlines and subheadlines, enhancing your website’s potential for conversion.

When creating your website content, consider the following aspects:

- Use a tone of writing that resonates with your target audience. Your content should address the user directly, responding to their queries. Incorporate key phrases that enhance the user experience and stylistic features that highlight important points.

- Use suitable headlines and subheadlines, break the text into digestible paragraphs, and format it for quick readers with bullet points or lists. This will make your content more accessible and engaging.

Navigation: A/B testing can help you improve your site’s navigation. Good navigation is key to a great user experience. Have a clear plan for your site’s layout and how pages link to each other.

Your site’s navigation starts at the home page, the main page from which all other pages branch and to which they link back. Make sure your layout helps visitors find what they need easily. Each click should take visitors to the right page.

Here are some tips to make your navigation better:

- Put your navigation bar in the usual places like the top or the left side. This makes your site easier to use.

- Group similar content together to make your site’s navigation easier. For example, if you’re selling different types of earphones and headphones, put them all in one place. This way, visitors don’t have to search for each type separately.

- Keep your site’s structure simple and predictable. This can increase your conversion rates and make visitors want to come back to your site.

Call to Action (CTA): The CTA is a crucial element that prompts users to take a desired action. You can test different aspects of your CTA such as its wording (e.g., “Buy Now” vs. “Add to Cart”), color (which can affect its visibility), size (bigger CTAs might be more noticeable), and placement (e.g., top of the page vs. bottom).

Images: Images can significantly impact how users perceive your brand or product. You can test different types of images (illustrations vs. real-life photos), colors, sizes, or even the number of images on a page.

Product Descriptions: For e-commerce sites, product descriptions play a key role in influencing purchase decisions. You can test different writing styles (bullet points vs. paragraphs), lengths, tones, or the inclusion of customer reviews/testimonials.

Forms: Forms are often used to collect information from users. You can test the number of fields (shorter forms might lead to higher completion rates), types of fields (dropdown, checkbox, etc.), or even the wording of field labels.

Design and Layout: Businesses often find it hard to decide what is most important for their website. A/B testing can solve this. For example, if you run an online store, your product page is very important. With today’s tech, customers like to see everything in high quality before they buy. So, your product page needs to look good and be easy to use. The page should have text, images (like product photos), and videos (like ads). It should answer all questions without being too busy:

- Depending on what you sell, find engaging ways to present all the necessary information and product details, so buyers don’t get lost in messy text while looking for answers. Write clear text and show size charts, color options, and so on.

- Show both good and bad reviews for your products. Bad reviews make your store look real.

- Don’t confuse buyers with hard words just to make your content look fancy. Keep it short, easy, and fun to read.

- Use tags like ‘Only 3 Left in Stock’, countdowns like ‘Offer Ends in 1 hour 45 minutes’, or special discounts to create a sense of urgency.

Other pages that need to look good are the home page and landing pages. Use A/B testing to find the best version of these pages. Try different ideas, such as using plenty of white space and high-quality images, showing product videos instead of images, and trying different layouts. Use heatmaps, clickmaps, and scrollmaps to see where people click and what distracts them. The cleaner your home page and landing pages are, the easier it is for visitors to find what they want.

Email subject lines: For email marketing, the subject line can greatly affect whether users open your email. You can test different lengths, tones, the use of personalization (including the recipient’s name), or the use of urgency/scarcity (e.g., “Limited time offer”).

Remember, the goal of A/B testing is to find what works best for your specific audience and context. It’s important to test one element at a time to accurately attribute any performance changes to the element you tested.

Mistakes You Should Avoid While A/B Testing

A/B testing is a powerful tool for optimizing digital content, but it’s not without its pitfalls. When conducting A/B tests, marketers and web designers often encounter a series of common mistakes that can skew results and lead to misguided decisions. These errors can occur at any stage of the testing process, from the initial planning phase to the final analysis of results. By understanding and avoiding these mistakes, you can ensure that your A/B tests provide accurate, actionable information that truly benefits your business. Let’s delve into these common A/B testing mistakes to avoid:

Before Testing

Unclear Hypothesis: Always start with a well-defined hypothesis for your A/B test, for instance: “Making the ‘Add to Cart’ button more prominent will increase conversions.”

Testing Insignificant Changes: Minor changes like button size or text color usually don’t affect user behavior unless the initial design is poor. Focus on significant elements that influence user decisions.

Lack of a Defined Strategy: A/B testing is complex and requires a strategic approach. Define what you are testing, the reasons for testing, and the metrics for success.

During Testing

Failure to Prioritize Tests: Rank your tests based on their potential impact and ease of implementation. This ensures you focus on the most beneficial opportunities.

Inappropriate Testing Tool: Choose a testing tool that aligns with your needs. Different tools offer various features, so pick the one that suits your strategy.

Overlooking Mobile Users: Mobile users may behave differently from desktop users. Ensure your testing tool supports mobile testing and incorporate mobile user behavior into your strategy.

After Testing

Premature Test Conclusion: Allow your test to run long enough to obtain statistically significant results. Early termination may lead to decisions based on incomplete data.

Neglecting Existing Customers: Don’t overlook the behavior and feedback of existing customers in your tests. They can provide valuable insights.

Remember, successful A/B testing involves careful planning, execution, and analysis. Avoid these pitfalls to ensure your test results yield valuable, actionable insights.

Different Types of A/B Testing

A/B testing is key to conversion rate optimization and user experience design. This method pits two or more variants against each other to see which one yields the best results. There are several types of A/B tests, including split testing, multivariate testing (MVT), and multi-page testing. Each type serves a unique purpose and provides valuable insights into user behavior and preferences. Let’s delve deeper into each of these types.

Split Testing: This is a method where you divide your audience to test different campaign versions to see which one yields better results. Essentially, you create two distinct versions of a webpage – the original and a variation – and then direct half of your traffic to each version. The goal is to identify which version is more effective in achieving your specific goal. This method is especially useful when you want to compare two completely different design approaches.

A notable instance of split testing is from HubSpot, where they conducted a test on their trial offer. The test involved comparing a 30-day trial with a 7-day trial. The results were quite significant, with the 30-day trial boosting conversions by 110%.

BestSelf Co provides another example. They added a benefit-driven headline to their landing page, which originally had no headline. This simple change resulted in improved sales.

Multivariate Testing: MVT is a technique where you test different combinations of multiple variations on a webpage. Unlike A/B testing, which tests one variable at a time, multivariate tests examine the effect of multiple changes simultaneously. For instance, if you want to test two different headlines, two images, and two button colors on the page, your MVT will include every combination of these variations: 2 × 2 × 2 = 8 versions of the page.

A real-life example of MVT comes from an online business selling homemade chocolates. They tested two versions of three elements – product images, CTA button color, and product headline – on their product landing page. The goal was to identify the best-performing combination to increase the conversion rate.

Hyundai.io also used MVT on their car model landing pages. They wanted to understand which elements were influencing a visitor’s decision to request a test drive or download a car brochure.
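A full-factorial multivariate test, like the two-headline, two-image, two-button-color example above, can be enumerated with `itertools.product`. The element names and option values in this sketch are placeholders.

```python
from itertools import product

# Hypothetical options for each page element under test.
elements = {
    "headline": ["Headline 1", "Headline 2"],
    "image": ["Image 1", "Image 2"],
    "button_color": ["green", "orange"],
}

# One variant per combination: pick exactly one option for every element.
variants = [dict(zip(elements, combo)) for combo in product(*elements.values())]

for i, variant in enumerate(variants, start=1):
    print(i, variant)
print(len(variants))  # 8
```

Because traffic is divided across every combination, each variant here receives only one-eighth of visitors, which is why multivariate tests generally need far more traffic than a simple A/B test.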

Multi-Page Testing: This type of testing involves making the same changes across multiple pages. It is particularly useful when you want to understand how changes to a user journey across several pages affect user behavior.

The objective of these tests is to make data-driven decisions and enhance user experience. It is crucial to select the right type of test based on your testing objectives.

A general example of multipage testing could involve customizing website elements based on visitor information. For instance, for visitors from a specific location, a phone number could be included in the website footer. Similarly, for visitors arriving via an ad campaign, the “contact us” CTA in the navigation menu could be replaced with a “request demo” CTA.

A/B Test Results

A/B testing is a marketing experiment where you split your audience to test variations on a campaign and determine which performs better. Here are some key points to consider when interpreting A/B test results:

Understanding How A/B Testing Works: In Algolia’s A/B testing feature, results are computed from click-through and conversion rates based on the events you send to the Insights API. As a result, the figures can differ from your own business data; for example, Algolia might show different analytics results than other platforms, such as Google Analytics.

Automatic Outlier Exclusion: Outlier traffic can significantly skew the results, making them unrepresentative of real user traffic. Algolia’s A/B testing feature automatically excludes outlier users when calculating A/B test result metrics. A user qualifies as an outlier if the number of tracked searches performed by the user exceeds the mean (μ) number of searches per user for the entire A/B test by more than 7 standard deviations (σ).

Looking at Your Business Data: As Algolia A/B testing is specific to search, the focus is on search data. It is best to look at the A/B test results in light of your business data to evaluate your test’s impact. For example, you can cross-reference search and revenue data to compute your interpretation of conversion or to look at custom metrics.

When to Interpret What: An Algolia A/B test begins to show data in the dashboard once 7 days have passed or the test is 20% complete, whichever happens first. This is because the initial data from A/B tests can be quite unstable, and making comparisons too early could result in inaccurate conclusions.

Variability in the Data: A/B test results can be quite volatile, especially when the sample size is small. Even with a strong testing hypothesis, a single mistake during analysis can derail your entire effort and lead to conclusions that cost you valuable leads and conversions.

External Factors: External events could influence the behavior of users. For example, a major news event or a holiday could cause a temporary change in user behavior that might affect your test results.

Sampling Bias: If the sample is not representative of the population, it could lead to biased results. For instance, if you run a test only on weekdays, it might not accurately represent user behavior on weekends.
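The outlier rule described under “Automatic Outlier Exclusion” (more than 7σ above the mean number of searches per user) can be sketched as follows. This is an illustrative reimplementation of the rule as stated above, not Algolia’s code; the use of the population standard deviation, the function name, and the sample data are assumptions.

```python
from statistics import mean, pstdev


def exclude_outliers(searches_per_user: dict[str, int], k: float = 7.0) -> dict[str, int]:
    """Drop users whose search count is more than k standard deviations above the mean."""
    counts = list(searches_per_user.values())
    mu = mean(counts)
    sigma = pstdev(counts)  # population standard deviation (an assumption)
    threshold = mu + k * sigma
    return {user: n for user, n in searches_per_user.items() if n <= threshold}


# Hypothetical data: 200 ordinary users plus one bot-like user.
searches = {f"user-{i}": 3 + (i % 5) for i in range(200)}
searches["bot"] = 5_000
kept = exclude_outliers(searches)
print(len(searches), "->", len(kept))  # 201 -> 200
```

Note that with very few users a single extreme value cannot sit 7σ above the mean, so a rule like this only has an effect on tests with a reasonably large user count.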

Segmentation of A/B Testing

A/B test segmentation is a method where your audience is split into smaller, distinct groups, and A/B tests are conducted for each group. This approach allows for a more precise understanding of how changes affect each subgroup, rather than considering the audience as a whole.

In an A/B test, you can perform segmentation before and after the test. Pre-segmentation involves analyzing existing visitor data to form visitor segments for a test, while post-segmentation involves breaking down the test results by segment after the test has run.

Common filters for creating segments in A/B testing include geographical locations, device type, traffic source, user activity, time of activity, browser type, and custom segments specific to your business.

For instance, testing your checkout page for segments that frequently abandon the process can be more effective than testing for all visitors. Segmentation provides detailed insights into visitor behavior and makes effective use of your A/B testing tool’s bandwidth.

When segmenting A/B tests, it’s best to focus on larger segments and test new experiences for smaller segments with high conversion rates.

However, there’s no universal strategy. Just as professional sports teams adjust their strategies for different opponents, you can’t always apply a general A/B testing approach when you have multiple visitor segments on your digital platform.
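Post-segmentation can start with computing the conversion rate for each (segment, variant) pair rather than one overall number. The records, segment names, and function name below are hypothetical toy data for illustration.

```python
from collections import defaultdict

# Hypothetical per-user records: (segment, variant, converted).
records = [
    ("mobile", "A", True), ("mobile", "A", False),
    ("mobile", "B", True), ("mobile", "B", True),
    ("desktop", "A", True), ("desktop", "A", True),
    ("desktop", "B", False), ("desktop", "B", True),
]


def conversion_by_segment(rows):
    """Return {(segment, variant): conversion rate}."""
    totals = defaultdict(int)
    conversions = defaultdict(int)
    for segment, variant, converted in rows:
        totals[(segment, variant)] += 1
        conversions[(segment, variant)] += int(converted)
    return {key: conversions[key] / totals[key] for key in totals}


for (segment, variant), rate in sorted(conversion_by_segment(records).items()):
    print(f"{segment:8} {variant}: {rate:.0%}")
```

In this toy data both variants convert 3 of 4 users overall, yet they move in opposite directions on mobile and desktop. With real data, each segment needs enough users on its own, and slicing results into many segments after the fact raises the chance that one of them shows a difference by luck alone.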

Correlation Between A/B Testing and SEO

A/B testing and SEO can be used together to enhance the user experience and increase a website’s visibility on search engines. For instance, you can use A/B testing to experiment with different SEO strategies on your website, such as using different keywords, meta descriptions, and title tags. By comparing the performance of these different strategies, you can identify which ones are most effective and use this information to optimize your website.

Here’s how A/B testing and SEO interact in the following areas:

No Cloaking: Cloaking is a black hat SEO technique where different content is presented to search engines and users. This is considered misleading and is against Google’s Webmaster guidelines. A/B testing avoids cloaking as long as changes are tested transparently: different users may see different versions of a page, but search engine crawlers are treated like any other visitor rather than being singled out to receive special content. This way the integrity of your SEO efforts is maintained and you avoid penalties associated with cloaking.

Implementing rel="canonical": The rel="canonical" tag tells search engines which version of a webpage is preferred when duplicate or similar content exists. During an A/B test that uses separate URLs, each variant page can carry a canonical tag pointing to the original page, so search engines keep indexing the original. Once the test identifies the best-performing version, you can make it the canonical version.

Using 302 Redirects Over 301s: Redirects are a common part of webpage navigation. A 302 redirect indicates a temporary change, while a 301 indicates a permanent one. A/B testing is particularly useful with 302 redirects, as it allows for temporary redirection to a new page (version B) while keeping the original page (version A) intact. Once the test ends, the redirect can be removed, and any necessary changes can be made to the original page based on the test’s results.
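The redirect and canonical-tag practices above can be sketched as a pure function that returns an HTTP status and headers, independent of any web framework. The URLs, the experiment key, and the 50/50 split are hypothetical. Crawlers are bucketed like any other visitor, with no user-agent checks, which is what keeps the test from turning into cloaking.

```python
import hashlib

# Hypothetical URLs for the original page (A) and the test variant (B).
ORIGINAL_URL = "https://example.com/pricing"
VARIANT_URL = "https://example.com/pricing-b"


def route(visitor_id: str) -> tuple[int, dict[str, str]]:
    """Decide how to answer a request for the original URL."""
    # Every visitor, crawlers included, is bucketed the same way: no user-agent checks.
    digest = hashlib.sha256(f"pricing-test:{visitor_id}".encode("utf-8")).digest()
    if digest[0] % 2 == 1:
        # 302 = temporary redirect, so search engines keep the original URL indexed.
        return 302, {"Location": VARIANT_URL}
    return 200, {}  # serve the original page as usual


def variant_head() -> str:
    """Tag for the variant page's <head>, naming the original as the preferred URL."""
    return f'<link rel="canonical" href="{ORIGINAL_URL}">'


print(route("visitor-123"))
print(variant_head())
```

In practice you would translate the returned tuple into your framework’s response object and emit the canonical tag inside the variant page’s `<head>`.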

Examples of A/B Testing

Website A/B Testing (HubSpot Academy’s Homepage Hero Image): HubSpot Academy found that among more than 55,000 page views, just 0.9% of users engaged with the video on the homepage. Of those, nearly 50% watched the entire video. Chat transcripts revealed the need for clearer messaging about this valuable, free resource. Consequently, the HubSpot team began testing how clearer value propositions affect user engagement and satisfaction. Three different variants were used in the test, with the HubSpot Academy conversion rate (CVR) as the primary metric.

Email A/B Testing: Consider an online retailer who wants to increase sales through their email marketing campaign. They could create two versions of an email and send each version to a different segment of their email list. The version that results in more sales is then used for future campaigns.

Ad Campaign A/B Testing: Suppose a company is running a Google Ads campaign. They could create two different ads and monitor which one generates more leads.

Hypothesis A/B Testing: There could be many scenarios, such as:

- A company might hypothesize that reducing the number of fields in their contact form will increase the number of sign-ups.

- A business could test whether changing the call-to-action text from “Download now” to “Download this free guide” increases the number of downloads.

- An app developer might test whether decreasing the frequency of app notifications from five times per day to two times per day improves user retention rates.

- A blog might test whether using images that are more closely related to the blog post content reduces the bounce rate.

- An email marketer might test whether personalizing emails with the recipient’s name increases the click-through rate.

A/B testing aims to identify which version of a variable (such as a web page or email) has the most positive impact on business metrics. The ‘winning’ version is the one that improves your business metrics, and implementing this version can help optimize your website or email campaign and increase your return on investment.

A/B testing is a key component of Conversion Rate Optimization (CRO), a process that uses both qualitative and quantitative user data to understand user behavior and improve website features. If you’re not conducting A/B testing, you could be missing out on potential business revenue.

Whether you’re a B2B business dealing with unqualified leads, an eCommerce store battling high cart abandonment rates, or a media house struggling with low viewer engagement, A/B testing can help. By analyzing visitor behavior data, you can identify and address your visitors’ pain points, improving your conversion metrics across the board. This is true for all types of businesses, including eCommerce, travel, SaaS, education, and media and publishing.

A/B Testing Calendar

| Week | Start Date | End Date | Test | Conversion Metric | Sample Size | Notes |
|---|---|---|---|---|---|---|
| 1 | [Date] | [Date] | Headline A/B test: Short vs. Long Headlines | Click-through rate (CTR) | 10K visitors per variation | Track secondary metrics like bounce rate and time on page. |
| | | | Call-to-action (CTA) button A/B test: Green vs. Orange Button | Click-through rate (CTR) and conversion rate | 5K visitors per variation | Consider A/B testing CTA copy alongside button color. |
| 2 | [Date] | [Date] | Product image A/B test: Lifestyle vs. Close-up Image | Add to cart rate and purchase rate | 7.5K visitors per variation | Use high-quality images for both variations. |
| | | | Product description A/B test: Bullet points vs. Paragraph | Add to cart rate and purchase rate | 5K visitors per variation | Ensure both descriptions convey the same information clearly. |
| 3 | [Date] | [Date] | Email subject line A/B test: Curiosity vs. Scarcity | Email open rate and click-through rate | 5K subscribers per variation | Personalize subject lines based on subscriber segments. |
| | | | Landing page A/B test: Single column vs. Multi-column layout | Conversion rate and time on page | 7.5K visitors per variation | Consider mobile-friendliness when testing layouts. |

- Clearly define your test goals and metrics. What are you trying to achieve with each test? How will you measure success?

- Choose a sample size large enough to detect the change you care about. An adequately sized sample makes your results reliable and generalizable to your entire audience.

- Control for external factors. Avoid running tests during major holidays or promotional periods that could skew your data.

- Analyze your results carefully. Don’t just look at the headline numbers; dig deeper into user behavior to understand why one variation performed better than the other.

- Implement the winning variation. Once you’ve identified the clear winner, update your website or marketing materials accordingly.
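A common normal-approximation formula turns the sample-size advice above into a number: per variation, n ≈ (z₁₋α/₂ + z₁₋β)² · [p₁(1 − p₁) + p₂(1 − p₂)] / (p₂ − p₁)². The baseline rate, target rate, 5% two-sided significance level, 80% power, and function name below are assumptions to replace with your own; the result is a per-variation count, matching how the calendar’s Sample Size column is expressed.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(p_baseline: float, p_target: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per variant for a two-sided test of two proportions."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p_baseline * (1 - p_baseline) + p_target * (1 - p_target)
    effect = p_target - p_baseline
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical: 5% baseline CTR, and we want to detect an increase to 6%.
print(sample_size_per_variant(0.05, 0.06))
```

Because the required sample grows with the inverse square of the difference you want to detect, halving that difference roughly quadruples the traffic you need.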

Statistical Approach for A/B Testing

When conducting an A/B test, you can choose between two primary statistical methods: the Frequentist method and the Bayesian method.

The Frequentist method is the traditional approach to A/B testing. It involves hypothesis testing using T-tests or Z-tests and determining statistical significance based on p-values. This method requires that the sample size be predetermined before examining the data. One key aspect of this approach is that it doesn’t allow for ‘peeking’ – checking the results before the planned sample size is reached. Doing so can lead to an increased rate of false positives.
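A small simulation illustrates why peeking raises the false-positive rate. It runs many A/A tests, where both groups share the same true conversion rate so any “significant” result is a false positive, and compares a single check at the planned sample size with a check after every batch of visitors. The conversion rate, batch size, number of looks, seed, and function names are arbitrary illustrative choices.

```python
import random
from math import sqrt
from statistics import NormalDist

Z_CRIT = NormalDist().inv_cdf(0.975)  # two-sided test at alpha = 0.05


def is_significant(conv_a: int, conv_b: int, n: int) -> bool:
    """Two-proportion z-test with equal group sizes n."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = sqrt(pooled * (1 - pooled) * (2 / n))
    return se > 0 and abs(conv_b - conv_a) / n / se > Z_CRIT


def simulate(runs=300, looks=10, batch_size=200, rate=0.10, seed=42):
    """Return (false-positive rate with one final look, rate with peeking)."""
    rng = random.Random(seed)
    fp_single = fp_peeking = 0
    for _ in range(runs):
        conv_a = conv_b = n = 0
        ever_significant = False
        for _ in range(looks):
            # Both groups have the same true rate: this is an A/A test.
            conv_a += sum(rng.random() < rate for _ in range(batch_size))
            conv_b += sum(rng.random() < rate for _ in range(batch_size))
            n += batch_size
            # Peeking: a "winner" is declared if ANY interim look is significant.
            ever_significant = ever_significant or is_significant(conv_a, conv_b, n)
        fp_peeking += ever_significant
        # No peeking: one test at the planned sample size.
        fp_single += is_significant(conv_a, conv_b, n)
    return fp_single / runs, fp_peeking / runs


single, peeking = simulate()
print(f"false-positive rate, single look: {single:.1%}")
print(f"false-positive rate, peeking:     {peeking:.1%}")
```

The single-look rate should land near the nominal 5%, while the peeking rate comes out noticeably higher, because each extra look is another chance for random noise to cross the significance threshold.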

On the other hand, the Bayesian method involves a four-step process:

- Establish your prior distribution.

- Select a statistical model that aligns with your beliefs.

- Execute the experiment.

- Update your beliefs based on the observed data and calculate a posterior distribution.
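For conversion rates, these four steps have a convenient conjugate form: a Beta prior combined with a binomial model gives a Beta posterior. The sketch below assumes a uniform Beta(1, 1) prior and estimates the probability that variant B’s true rate exceeds A’s by Monte Carlo sampling; the function name and counts are hypothetical.

```python
import random


def prob_b_beats_a(conv_a: int, n_a: int, conv_b: int, n_b: int,
                   prior=(1.0, 1.0), draws: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(rate_B > rate_A) under Beta-Binomial models."""
    a0, b0 = prior  # step 1: prior Beta(a0, b0)
    # Step 2: model each variant's conversions as binomial with a Beta prior.
    # Step 3: the experiment supplies the observed counts (conv, n).
    # Step 4: conjugacy gives the posterior Beta(a0 + conv, b0 + n - conv).
    post_a = (a0 + conv_a, b0 + n_a - conv_a)
    post_b = (a0 + conv_b, b0 + n_b - conv_b)
    rng = random.Random(seed)
    wins = sum(rng.betavariate(*post_b) > rng.betavariate(*post_a) for _ in range(draws))
    return wins / draws


# Hypothetical results: 100 of 1,000 visitors converted on A, 150 of 1,000 on B.
print(prob_b_beats_a(100, 1_000, 150, 1_000))
```

Unlike a p-value, this number reads directly as the probability that B is better, given the chosen prior and model; a different prior can change the answer, which is why the first step matters.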

The choice between these two methods depends on the specific context and requirements of your test. Understanding each approach’s assumptions and implications is crucial before deciding. Also, remember to repeat your tests to enhance the accuracy of your results.

Challenges of A/B Testing

In A/B testing, several challenges can impact the effectiveness and accuracy of your results. Let’s delve into each of these challenges in detail.

Challenge #1: Average-Centric Approach

The Average-Centric Approach in A/B testing means we look at the average effect of a change on all users. It is a popular method because it is simple and gives a single metric for the impact of a change.

But this method can miss important details. It might not reveal that the change affects different groups of users in different ways.

For example, let’s say a website introduces a new feature to make it easier to use. The average effect might be positive, meaning that users, taken as a whole, benefit from the new feature.

But if we look closer, we might see that the new feature is very useful for new users who were finding the website difficult before, but not so much for old users who were already comfortable with how things were. So, the average effect doesn’t show that the new feature is good for one group (new users) and not so good for another (old users).

So, while the Average-Centric Approach can give a good overall view of the impact of a change, it’s also important to look at how the change affects different user groups. This can give more detailed insights and help make better decisions.
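A worked example with hypothetical numbers shows how the average can hide a split effect. Suppose new users are 30% of traffic and the change raises their conversion rate by 4 percentage points, while for existing users (70% of traffic) it lowers conversion by 1 point. The overall effect is the traffic-weighted average of the segment effects:

$$
\Delta_{\text{overall}} = w_{\text{new}}\,\Delta_{\text{new}} + w_{\text{old}}\,\Delta_{\text{old}} = 0.3 \times (+4) + 0.7 \times (-1) = +0.5 \text{ percentage points}
$$

The average is positive, yet the majority of users, the existing ones, are slightly worse off.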

Challenge #2: Overlooking User Interactions

When you run an A/B test, it is easy to forget that users talk to each other. Overlooking these user interactions can bias your results. Here’s why it matters:

Suppose you have a new feature. You show it to half of your users (the variation, B). The other half keeps the old experience (the control, A).

Now, let’s say a user who sees the new feature likes it and tells others about it. This can change how users in both groups feel. Even users in the control group, who never saw the new feature, might start to feel they are missing out.

This can bias your A/B test results: the new feature might look better than it really is. So, when you run A/B tests, remember to think about how users influence each other. If you don’t, your test results might lead you to the wrong conclusions.

Challenge #3: Brief Testing Duration

Running a test for only a short time has several drawbacks:

- Users Need Time: When something new comes up, users might not like it at first, but they might start liking it as they use it more. A short test might miss this shift.

- Not Enough Data: If the test is short, we might not collect enough data to make good decisions. This is especially true for features that users don’t use every day.

- Missing Long-Term Effects: Some problems only show up after using something for a long time. A short test might miss these problems.

- Seasonality Matters: If we test something for a short time, we might not see how seasons affect it. For example, a holiday shopping feature might not work the same in all seasons.

Short tests give quick feedback, but longer tests are needed to understand how users really feel about a product or feature.

Challenge #4: Limited User Base

A/B testing is a way to test changes to your webpage against the current design and determine which one produces better results.

Now, let’s suppose you have a limited user base. This means that the number of users who are exposed to each version (A and B) of your test is small.

Statistical significance tells you whether the difference you observed would be unlikely if the change you made had no real effect, that is, if chance alone were responsible. If your test results lack statistical significance, the data you’ve collected isn’t strong enough to support a solid conclusion.

Here’s why a limited user base can lead to a lack of statistical significance:

- Insufficient Data: With a small user base, you may not have enough data to reach a statistically significant result. This could lead to uncertainty in the results.

- Increased Variability: With fewer users, the behavior of just one or two users can have a big impact on the results. This can increase the variability of your results and make it harder to draw accurate conclusions.

- False Conclusions: Because of the above points, you might see a change in user behavior that seems to be caused by the changes you made, but is actually just due to chance. This can lead to false conclusions about the impact of the change.
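To see why small user bases are inconclusive, the sketch below computes a normal-approximation 95% confidence interval for the difference between two conversion rates. The rates (5% vs. 6%) and sample sizes are hypothetical, the function name is illustrative, and the normal approximation itself becomes unreliable for very small samples.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(p_a, p_b, n_per_group, confidence=0.95):
    """Normal-approximation confidence interval for the difference p_b - p_a."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    se = math.sqrt(p_a * (1 - p_a) / n_per_group + p_b * (1 - p_b) / n_per_group)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


# Same hypothetical observed rates at different user-base sizes
for n in (500, 5_000, 50_000):
    low, high = diff_confidence_interval(0.05, 0.06, n)
    verdict = "excludes 0" if low > 0 or high < 0 else "includes 0 (inconclusive)"
    print(f"n={n:>6} per group: [{low:+.4f}, {high:+.4f}] {verdict}")
```

With 500 users per group the interval spans zero, so the same observed 1-point difference is inconclusive; with 5,000 or 50,000 users per group it does not.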

So, when conducting A/B tests, it’s important to ensure that you have a large enough user base to achieve statistically significant results and draw accurate conclusions. Otherwise, the results might mislead you into making changes that don’t actually improve the user experience.

Challenge #5: Long-Term Tests

Long-term tests are studies or experiments that are conducted over an extended period. These tests can range from a few months to several years, depending on the nature of what is being studied.

The misconception that long-term tests are not worth conducting often stems from the desire for quick results. In today’s fast-paced world, there is a tendency to favor tests that can provide immediate insights. However, this approach can overlook the importance of understanding long-term effects.

Here’s why long-term tests are crucial:

- Understanding Long-Term Effects: Some effects only manifest over a long period. For instance, the impact of a new drug, environmental changes, or educational methods may not be apparent immediately but can become significant over time.

- Detecting Rare Events: Long-term tests increase the chances of observing rare events that might not occur in a shorter timeframe.

- Stability and Consistency Check: They allow us to check the stability and consistency of a system or a process over time. This is particularly important in fields like medicine, environmental science, and product development.

- Valuable Insights: Long-term tests can provide valuable insights that short-term tests cannot. They can reveal trends, patterns, and effects that only become noticeable over a longer period.

In conclusion, while long-term tests do require more time and resources, the insights they offer can be invaluable. They allow us to understand the full implications of a process, treatment, or phenomenon, making them an essential tool in many fields of study.

Challenge #6: Technical Complexity

The implementation of A/B tests can be technically challenging due to issues like original content flashing, alignment issues, code overwriting, and integration difficulties.

Let’s break down the technical complexities involved in implementing A/B tests:

- Original Content Flashing: This is also known as “Flash of Original Content” or FOOC. It happens when the original content on a webpage is briefly visible before the test variation loads. This can be disorienting for users and may affect the results of the A/B test. It’s usually caused by the slow loading of the testing script or the test variation.

- Alignment Issues: These occur when the elements in the test variation do not align properly with the rest of the page. This could be due to differences in CSS styles, HTML structure, or dynamic content that changes the layout. It can make the page look broken or unprofessional, which could influence the user’s behavior and skew the test results.

- Code Overwriting: A/B testing often involves changing elements on a webpage using JavaScript. If the same element is manipulated by other scripts on the page, there could be conflicts that cause the test variation to not display as intended. This is why it’s important to have good coordination and code management practices in place.

- Integration Difficulties: A/B tests may not always play well with other systems integrated into your website, such as analytics platforms, content management systems, or personalization tools. There could be issues with tracking test data, displaying personalized content, or maintaining a consistent user experience across different systems.

These challenges highlight the importance of having a well-planned and coordinated approach to A/B testing, including thorough QA processes, collaboration between teams, and careful selection of testing tools. It’s not just about deciding what to test, but also ensuring that the test is implemented correctly and that the results are reliable.

Challenge #7: Insufficient Sample Size

In A/B testing, you’re comparing two versions (A and B) of something to see which performs better. This could be a webpage, an email campaign, or any other item where you’re trying to measure the effect of a specific change.

The sample size refers to the number of observations or individuals in any statistical setting. In the context of A/B testing, it’s the number of users who are exposed to each version of the test (A and B).

If the sample size is too small, it can lead to inaccurate results. Here’s why:

- Statistical Power: A small sample size may not have enough power to detect a difference, even if one exists. Statistical power is the probability that the test correctly rejects the null hypothesis (i.e., the assumption that there is no effect or difference).

- Variability: With a smaller sample size, there’s a higher chance of getting results that don’t represent the entire population. This is because the sample may not capture the full range of variability in the population.

- Margin of Error: The margin of error of a statistic is the amount of random sampling error in its estimate. The smaller the sample size, the larger the margin of error, and the less confidence we can have in the results.

- Overfitting: Small sample sizes can lead to overfitting, where the results may fit the sample data very well but perform poorly on new, unseen data.

In summary, while a test with a small sample may be quicker and easier to run, it can lead to inaccurate results and conclusions. Always make sure your sample size is large enough to represent the population you’re trying to understand. This helps ensure the validity and reliability of your A/B test results.
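A common way to size a test up front is a power calculation. The sketch below uses the standard normal-approximation formula for comparing two proportions; the baseline rate, the target rate, the 5% significance level, and the 80% power are assumptions you would replace with your own, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_control, p_variation, alpha=0.05, power=0.80):
    """Approximate users needed per group for a two-sided two-proportion z-test.

    n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_variation * (1 - p_variation)
    effect = p_variation - p_control
    return math.ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


print(sample_size_per_group(0.05, 0.06))   # detect 5% -> 6%
print(sample_size_per_group(0.05, 0.055))  # detect 5% -> 5.5%
```

With a 5% baseline and a hoped-for 6%, this gives a little over 8,000 users per group; halving the effect to 5.5% raises the requirement to over 31,000, because the required sample grows with the inverse square of the effect.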

Challenge #8: Premature Result Checking

Premature Result Checking in the context of A/B testing refers to the practice of checking the results of an A/B test before the test has reached its predetermined sample size.

Here’s why it can lead to false conclusions:

- Fluctuation in Results: In the early stages of an A/B test, results can fluctuate significantly. An initial trend might seem promising, but as more data is collected, this trend could reverse.

- False Positives/Negatives: If you stop the test early because a result seems significant, you increase the risk of a false positive (Type I error), where you conclude there’s a difference when there isn’t one. Similarly, if you stop early because the result looks flat, you increase the risk of a false negative (Type II error), where you conclude there’s no difference when there actually is one.

- P-Hacking: Continually checking your results and stopping the test as soon as you see a significant result can lead to p-hacking. This is a practice where people misuse data analysis to find patterns in data that can be presented as statistically significant, thus leading to incorrect conclusions.
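The effect of peeking can be checked with a small simulation of A/A tests, where both groups share the same true conversion rate, so any “significant” result is a false positive. The sketch assumes a 10% conversion rate, ten interim looks of 100 users per group each, and a pooled two-proportion z-test; all of these values and the function names are illustrative.

```python
import math
import random
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()


def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pool * (1 - pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - STANDARD_NORMAL.cdf(abs(z)))


def simulate_aa_tests(experiments=500, looks=10, batch=100, rate=0.10,
                      alpha=0.05, seed=1):
    """Returns (false-positive rate when stopping at the first significant look,
    false-positive rate when testing once at the planned sample size)."""
    rng = random.Random(seed)
    peeking_hits = final_hits = 0
    for _ in range(experiments):
        conv_a = conv_b = n = 0
        stopped_early = False
        for _ in range(looks):
            # Both groups draw from the same true rate: there is no real effect
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            n += batch
            if not stopped_early and p_value(conv_a, n, conv_b, n) < alpha:
                stopped_early = True  # a "peeker" would declare a winner here
        peeking_hits += stopped_early
        # A disciplined analyst looks once, after all planned users have arrived
        final_hits += p_value(conv_a, n, conv_b, n) < alpha
    return peeking_hits / experiments, final_hits / experiments


peeking, final = simulate_aa_tests()
print(f"false positives with peeking: {peeking:.1%}, single final check: {final:.1%}")
```

Stopping at the first look with p < 0.05 typically yields a false-positive rate well above the nominal 5%, while a single check at the planned sample size stays close to it.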

Therefore, it’s crucial to determine an appropriate sample size before starting the test and resist the temptation to check results prematurely. This helps ensure that the results of your A/B test are reliable and statistically valid.

Challenge #9: Changing Allocation During Test

A/B testing is a method of comparing two versions of a webpage or other user experience to see which one performs better.

In an A/B test, you take a webpage or user experience and modify it to create a second version of the same page. This change can be as simple as a single headline or button, or be a complete redesign of the page. Then, half of your traffic is shown the original version of the page (known as the control) and half is shown the modified version of the page (the variation).

The results of an A/B test are then studied to determine which version showed the greatest improvement.

Now, if you change the distribution of users between the control and variation groups during the test, it can distort the results. Here’s why:

- Bias in User Behavior: Users who were initially in the control group and later moved to the test group may behave differently than those who were in the test group from the start. This is because their experience with the website has been influenced by the control version.

- Inconsistency in Data: Changing the distribution of users during the test leads to inconsistency in data collection. The data collected before and after the change in distribution may not be comparable, leading to inaccurate results.

- Statistical Significance: A/B tests rely on statistical principles to determine whether the observed difference is significant, that is, unlikely to have arisen by chance if the change had no real effect. Changing the distribution of users during the test can undermine that calculation, because the groups are no longer being compared over the same conditions.
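The “inconsistency in data” point can be illustrated with hypothetical numbers. In the sketch below the variation has no real effect (both groups convert at the same rate within each week), but the allocation moves from 50/50 in week 1 to 10/90 in week 2 while the baseline rate drops, for example because of a seasonal dip. The class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Period:
    users_a: int
    users_b: int
    conversion_rate: float  # identical for A and B: the change has no real effect


periods = [
    Period(users_a=5000, users_b=5000, conversion_rate=0.10),  # week 1: 50/50 split
    Period(users_a=1000, users_b=9000, conversion_rate=0.05),  # week 2: 10/90 split, lower baseline
]


def pooled_rate(users_and_rates):
    """Conversion rate after pooling all periods together."""
    total_users = sum(users for users, _ in users_and_rates)
    conversions = sum(users * rate for users, rate in users_and_rates)
    return conversions / total_users


rate_a = pooled_rate([(p.users_a, p.conversion_rate) for p in periods])
rate_b = pooled_rate([(p.users_b, p.conversion_rate) for p in periods])
print(f"pooled A: {rate_a:.2%}, pooled B: {rate_b:.2%}")
```

Pooled, A converts at about 9.2% and B at about 6.8%, so B looks clearly worse even though the two groups are identical week by week. This is an instance of Simpson’s paradox: B simply received more of its traffic in the weaker week.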

Therefore, it’s recommended to keep the distribution of users between the control and variation groups consistent throughout the test to ensure accurate and reliable results.

Challenge #10: Ignoring Lessons from Unsuccessful Tests

Ignoring lessons from unsuccessful tests means not paying attention to A/B tests that didn’t improve things or that made things worse. This is a mistake because even “failed” tests can teach us important things. For example, they can show us what doesn’t work for our audience, which is just as important as knowing what does.

Every test gives us data that can lead to insights. Even if a test doesn’t show a big difference, it still gives us data about how users behave which can help us make decisions in the future.

We should look closely at all tests, not just the successful ones. Unsuccessful tests can give us insights into how users behave and what they prefer and can show us potential problems with the test design or execution.

Knowing why a test didn’t give positive results is key to improving future tests. The cause could be poor test design, an insufficient sample size, or simply a change that didn’t resonate with users.

By learning from unsuccessful tests, you can refine your hypotheses, improve your test design, and increase the chances of running successful tests in the future.

In short, every A/B test, whether it works or not, gives us valuable data. It’s important to look at unsuccessful tests to understand why they didn’t work and how to do better in the future. If we ignore the lessons from unsuccessful tests, we miss a chance to learn and improve.

Challenge #11: Low-Traffic Websites

A/B testing is easy to understand, but it can give unreliable results for websites with low traffic.

Why? Because A/B testing needs a lot of data to work well. If your website has lots of visitors, you can collect this data quickly. However, if your website has few visitors, it can take a long time to collect enough data. During this time, many things can change, like market trends or customer behavior. This can make your results less reliable.

Also, with low traffic, a few users can have a big impact on the results. If one or two users have a really good or bad experience, it can change the results a lot.
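Low traffic also limits how small a change a test can detect. The sketch below computes an approximate minimum detectable effect for a fixed test length, assuming a 5% baseline conversion rate, an even split, a 28-day test, a 5% significance level and 80% power; the traffic levels and the function name are hypothetical.

```python
import math
from statistics import NormalDist


def minimum_detectable_effect(p_control, n_per_group, alpha=0.05, power=0.80):
    """Approximate smallest absolute lift detectable with the given power.

    Normal approximation that assumes both groups convert near p_control,
    so the variance of the difference is about 2 * p(1 - p) / n.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return (z_alpha + z_power) * math.sqrt(2 * p_control * (1 - p_control) / n_per_group)


# Hypothetical site: 5% baseline, even split, 28-day test
for daily_visitors in (200, 2_000, 20_000):
    n_per_group = daily_visitors * 28 // 2
    mde = minimum_detectable_effect(0.05, n_per_group)
    print(f"{daily_visitors:>6} visitors/day: MDE about {mde * 100:.2f} percentage points "
          f"({mde / 0.05:.0%} relative lift)")
```

Because the detectable effect shrinks only with the square root of the sample size, ten times less traffic means a change must be about 3.2 times larger to be detected reliably.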

So, A/B testing is a great tool, but it might not work as well for low-traffic websites. You need to think about this when you’re deciding how to make your website better.

Challenge #12: Sample Data Bias

When we collect data, we need to make sure it fairly represents the users we care about. If it doesn’t, the sample is biased (sample data bias), and our results might not be correct.

In A/B testing, we compare two groups (Group A and Group B). If these groups are different in some way, like if Group A is new users and Group B is returning users, then our test might not be fair.

If we make decisions based on unfair tests, we might make mistakes. We could think a change is good when it’s not, or bad when it’s good. We might spend time and money on the wrong things. And if users don’t like the changes we make, they might stop trusting us.

To avoid these problems, we need to pick our test groups carefully. Users should be assigned to groups at random, and the groups should represent everyone we’re interested in. We can also use statistical checks to detect imbalances between the groups and statistical adjustments to correct for them.
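One statistical check for group imbalance is a sample ratio mismatch (SRM) test: a chi-square goodness-of-fit test comparing the observed group sizes with the planned split. The sketch assumes a planned 50/50 split and hypothetical counts; the function name is illustrative. A very small p-value suggests the assignment or tracking is broken. Note that it only checks group sizes, not whether the groups are alike in other respects, such as the share of new users.

```python
import math


def sample_ratio_mismatch_p(users_a, users_b, expected_share_a=0.5):
    """Chi-square goodness-of-fit p-value for the observed A/B split (1 degree of freedom)."""
    total = users_a + users_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((users_a - expected_a) ** 2 / expected_a
            + (users_b - expected_b) ** 2 / expected_b)
    # With 1 degree of freedom, P(chi-square > x) = erfc(sqrt(x / 2))
    return math.erfc(math.sqrt(chi2 / 2))


print(sample_ratio_mismatch_p(50_100, 49_900))  # close to plan: large p-value
print(sample_ratio_mismatch_p(50_000, 51_500))  # suspicious split: tiny p-value
```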

Challenge #13: Designing Tests That Yield Significant Results

A/B testing is when you compare two versions of a webpage to see which one is better. However, not all A/B tests produce statistically significant results. This can happen if there aren’t enough users in the test, the test is too short, the changes are too small, or the metric you measure varies a lot from user to user.

Even if a test doesn’t produce significant results, it still uses up time and resources: the time to set up and run the test, the computing resources to run it and analyze the data, and the lost opportunity to use a better design while the test is running.

So, it’s important to plan your tests well. You should have a clear idea of what you’re testing and how you’ll look at the results. This can help make sure your A/B tests are useful and lead to real improvements in your user experience.
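Planning can include estimating a test’s power, the probability of detecting a real change of a given size. The sketch below uses a normal approximation for two conversion rates; the rates, sample sizes, and function name are hypothetical. It shows the levers named above: more users raise power and smaller changes lower it. For continuous metrics, a noisier metric (larger variance) lowers power in the same way, because it widens the standard error.

```python
import math
from statistics import NormalDist


def power_two_proportions(p_control, p_variation, n_per_group, alpha=0.05):
    """Approximate power of a two-sided two-proportion z-test.

    Normal approximation; ignores the negligible chance of a significant
    result in the wrong direction.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    se = math.sqrt((p_control * (1 - p_control)
                    + p_variation * (1 - p_variation)) / n_per_group)
    return NormalDist().cdf(abs(p_variation - p_control) / se - z_alpha)


for n in (2_000, 8_000, 32_000):
    print(f"n={n:>6} per group: power for 5%->6% = {power_two_proportions(0.05, 0.06, n):.0%}, "
          f"for 5%->5.5% = {power_two_proportions(0.05, 0.055, n):.0%}")
```

A test planned with low power is likely to end inconclusive even when the change works, which is exactly the wasted time and resources described above.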

Bottom Line

A/B testing is a method that helps you make decisions based on data. It involves comparing two versions of a campaign or content to determine which one is more effective. This technique aids in pinpointing areas for enhancement, implementing significant changes based on the outcomes, and comprehending user behavior.

When you have a theory based on research and observations and want to confirm if your solution is correct, A/B testing can be very beneficial. However, A/B testing is best suited for achieving gradual improvements and may not be the best testing solution for launching new projects or implementing major changes.

A/B testing can be complex and requires careful planning and execution. For example, it is essential to have a well-formulated hypothesis to test, to decide how traffic will be split before the test starts, and to ensure that the test runs long enough to draw reliable conclusions.

In conclusion, A/B testing is a crucial element of effective marketing and product development strategies. It allows businesses to fine-tune their services based on their actual user responses and behaviors.